Each year, an estimated 250,000 people die in the U.S. due to medical error.

AI could help to reduce that number.

“This is a significant problem to solve,” said Fei-Fei Li, Stanford HAI co-director and professor of computer science. “AI can address it directly,” she added, for example by monitoring procedures and home care and by helping to triage patients.

That’s just one of the takeaways from “Healthcare’s AI Future,” a virtual fireside chat co-hosted by HAI and DeepLearning.AI. Li joined Andrew Ng, co-founder of DeepLearning.AI and a Stanford adjunct professor, along with moderator Curtis Langlotz, Stanford professor of radiology and director of Stanford’s Center for Artificial Intelligence in Medicine and Imaging (AIMI), for a wide-ranging discussion on AI’s value to the health care field, implementation hurdles, and bias concerns.

“Health care is of such importance to humanity as a whole,” Li said. “AI technology can help improve this critical sector.”

Ng agreed. “So much medical data is just sitting there, waiting for possible applications. I got into this space to create real opportunities for impact.”

Here are key takeaways from their discussion.

The Challenge of Implementation

While the promise of applying AI in health care is great, implementation is not easy. That helps explain why so few AI models are in clinical use today.

For example, an AI decision-making model that performs well in one medical context may not translate well to another, Ng noted. “If you take a research-based model, including my recent ones, and try to apply it in a different hospital than the one that provided the original training data, the ‘data drift’ will cause the model’s performance to degrade significantly.”

This issue isn’t unique to health care. “Bridging from proof-of-concept to implementation is a common challenge across industries,” Ng said. “We build fantastic AI tools, but deploying them requires significant work beyond their initial training.”

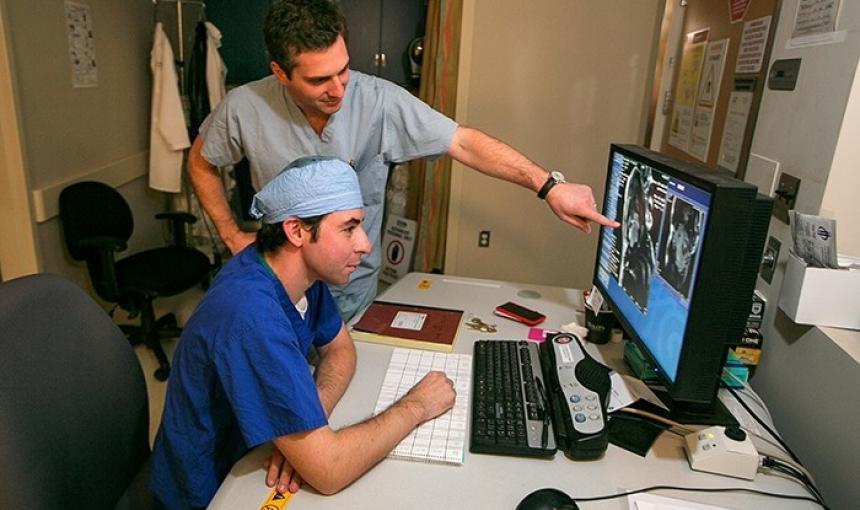

Li, whose research includes a focus on “ambient intelligence”—using AI to monitor and respond to human activity in homes, hospitals, and other environments—faces a different challenge: finding data to build effective systems, such as those that could help reduce ICU patients’ risk of falling from their beds.

“We have zero data in this area,” Li said. “We’re working to combine smart sensors and AI algorithms to illuminate this dark space and understand all the human factors involved—just like for self-driving car technology.”

The Need for Collaboration—and Humility

Health care is a multi-stakeholder arena. Driving positive change with AI and other technologies requires a collaborative, humble approach.

“Almost all my work is collaborative,” Li said. “We scientists have to appreciate that working in health care is about people’s lives, and that means having humility and a willingness to learn.” For example, she has learned a lot about clinical challenges from collaborators like Stanford professor of medicine Arnie Milstein. “What he has shown me has helped humanize what we do.”

To ensure students joining her AI health care team understand the human context, Li asks them to “close their laptops and shadow doctors on the team, especially in the ICU—they see the human vulnerability of this space, how tiring a nursing shift is, what families go through—then we talk about how to solve problems there.”

Ng’s collaborators from different sectors have helped him prioritize areas of focus in health care. “I used to jump into the first area I was excited about. Now, I sit down with cross-functional partners to understand the relative value of different projects.”

That cross-functional perspective was especially important when Ng’s team created a model to make palliative-care recommendations. “We had to ensure the system respected the patient and reflected empathy,” Ng said, pointing, for example, to the model’s estimates of each patient’s risk of dying within the next 12 months. At the same time, the model’s outputs “injected data into a hierarchical system and created space for others’ inputs, such as empowering nurses to raise issues with senior physicians.”

Preventing Bias

Li and Ng emphasized the importance of recognizing potential bias in AI technology and of taking steps as a team to prevent it.

“We sit down with a multi-stakeholder team and brainstorm everything that could go wrong, to prevent bias,” Ng said. “We use metrics to analyze data and audit the system, keeping an open mind to the idea that we might miss something along the way and reacting to new data.”

Li added, “The most important thing is to recognize the human responsibility here. I cringe when the media says, ‘AI is biased.’ That puts the responsibility on the machine, not people. There are no independent machine values. So, before you write a line of code, you have to gather data and get ethicists, patients, nurses, and doctors in a room to discuss potential issues including bias.”

The Future of AI and Health Care

The potential applications of AI in health care are broad, and so are the challenges that remain to be addressed.

To prevent deaths due to medical errors, AI-based technologies can help ensure procedures are carried out as intended and can be part of a system that triages patients for treatment and monitors those in home care more effectively. “AI will help us save lives and money,” Li said.

Ng pointed to multiple additional areas of opportunity. “Mental health is a big one, as we need more scalable tools in this space. Diagnostics is another. Many countries don’t have great radiology options, so it’s a good place to apply AI, especially in developing regions. And we can’t forget the operational side of health care: how to schedule patients and nursing shifts, how to reduce costs in the system. That’s where AI can be of service as well.”

To make the most of AI in health care, though, we need to resolve ongoing challenges in this space. “The software needs to get better, and we need to improve machine learning operations,” Ng said. “Progress is happening, but slowly.”

“We need proven AI products and stories, as in radiology, where the technology serves both patients and clinicians by improving radiologists’ accuracy,” Li said. “Such impact can lead to a watershed moment. In the end, it’s about a human-centered approach: Health care is ultimately about human dignity, and we can use AI to help uphold that principle.”

Stanford HAI's mission is to advance AI research, education, policy and practice to improve the human condition.