An ESR creates a path for researchers to consider the wider ramifications of their work. | Linda A. Cicero

Too often, we understand artificial intelligence’s negative impact after implementation: a hiring AI that rejects women’s resumes, loan-approval AI biased against low-income earners, or racist facial recognition technologies. What if researchers acted on potential harm earlier in the process?

For the first time at Stanford University, a new program is requiring AI researchers to evaluate their proposals for any potential negative impact on society before those proposals are green-lighted for funding.

The Ethics and Society Review (ESR) requires researchers seeking funding from the Stanford Institute for Human-Centered Artificial Intelligence (HAI) to consider how their proposals might pose ethical and societal risks, to come up with methods to lessen those risks, and, if needed, to collaborate with an interdisciplinary faculty panel to ensure those concerns are addressed before funding is received.

The goal, says Michael Bernstein, associate professor of computer science and the project co-lead, is to encourage AI researchers to thoughtfully consider the societal impact of their work and minimize risks in the initial stages of the research process. Despite the fact that AI continues to be implicated in social ills ranging from the spread of disinformation to unequal outcomes in law enforcement and health care, there are still few resources to help with this; AI research often falls outside the purview of existing entities such as the Institutional Review Board, which is designed to evaluate harm to individuals, rather than society.

“Unlike other professions such as law and medicine that have a history of institutional processes for considering ethical issues, computing doesn’t really have a strong institutional response; it lacks any sort of widely applied ethical or societal review,” Bernstein says. “So how do we get people to engage in this? The ESR’s main insight was that we have one point of leverage, which is when researchers are applying for internal funding. Our idea was to work with the grantor so that funding isn’t released until the teams have completed an ethics and society review.”

Launching the HAI Pilot

Bernstein and the ESR team — Debra Satz, the Vernon R. and Lysbeth Warren Anderson Dean of the School of Humanities and Sciences; Margaret Levi, director of the Center for Advanced Study in the Behavioral Sciences (CASBS) and co-director of the Stanford Ethics, Society and Technology Hub; and David Magnus, the Thomas A. Raffin Professor of Medicine and Biomedical Ethics — took the idea to James Landay, professor of computer science and HAI associate director, in the hopes of piloting the concept on researchers seeking funding from HAI.

“When Michael reached out to me about this, I said we should do it,” Landay says. “It’s been baked into HAI from the start that we want to make sure we’re looking at the impact of AI on society, and we think that has to occur at the beginning of creating new AI technologies, when you can have the most impact on people’s research, not after these ideas have turned into research papers and eventually into products. The problem is that we don’t have a mechanism for doing that, other than saying, ‘Hey, we think it’s important.’ The advantage of this idea is that it forces people to think about these issues at that early stage.”

The one-year pilot program began in spring 2020. It asked researchers seeking HAI grant funding to include in their proposal a simple ESR statement that identified their project’s potential risks to society and outlined plans to mitigate those risks. After HAI completed its typical merit review, the ESR began its process with 41 grants that HAI had recommended for funding, which included both larger Hoffman-Yee grants and smaller seed grants. Each proposal and its ethical impact statement were reviewed by two members of the ESR’s interdisciplinary faculty panel, which drew on expertise from fields including anthropology, computer science, history, management science and engineering, medicine, philosophy, political science, and sociology.

About 60 percent of those projects received written ESR feedback and were cleared to receive funding. The remaining 40 percent of research teams iterated at least once with the ESR. All the proposals were eventually approved for funding.

Deepening Conversations on Ethical Concerns

One of the research teams taking part in the pilot was led by Sarah Billington, professor of civil engineering and a senior fellow at the Stanford Woods Institute for the Environment. Her research proposal focused on human-centered building design for the future that uses data collection and machine learning to sense and respond to the well-being of occupants within a structure, including adapting the building systems and digital environments to support and enhance occupant experiences.

“A major part of our research agenda centers on developing a platform that respects and preserves occupant privacy, and this is what the ESR board picked up on,” Billington says. “The review team had some significant concerns along those lines, wondering if the eventual platform could be used by various actors in a ‘Big Brother’ way to monitor employees through their personal information, or to focus solely on productivity.”

The ESR team also expressed concern that the building’s sensor systems could be misused by future employers in the building, a scenario the team hadn’t thoroughly considered, Billington says. Her team iterated with the reviewers to clarify the project’s intent and its privacy safeguards and is now considering new ways of aggregating and anonymizing the data. They’ve also expanded their research around privacy, exploring how individuals do — and do not — want their data used and how comfortable they are sharing that information.

“The ESR deepened the conversations around privacy that we were already having,” Billington says. “The other thing they suggested — which I hadn’t really thought about — was for us to become advocates for ethics in our project. So the next time I gave a talk, for example, I used that platform to emphasize the need for privacy-preserving research in our work. I hadn’t thought so much before how as a researcher I can elevate these conversations around ethics with my voice.”

New Strategies Lead to New Designs

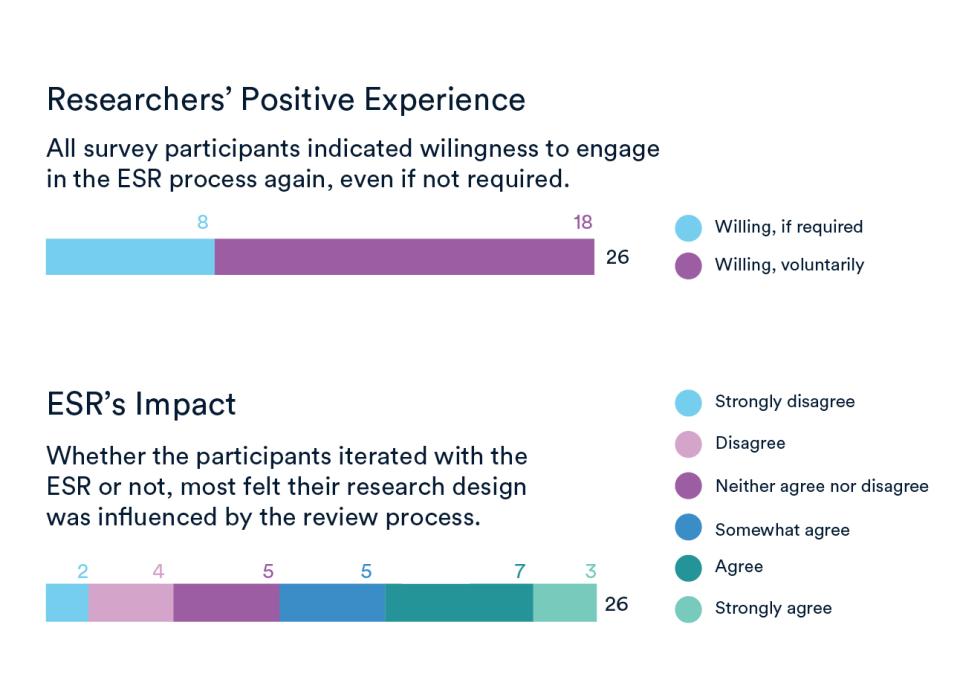

Surveys conducted after the pilot showed that, among respondents, 67 percent of those who iterated with the ESR, and more than half of all researchers, felt the ESR process had influenced their research design. Moreover, reviewers identified ethical and societal risks not mentioned by researchers in 80 percent of the projects that iterated with the ESR. Every survey respondent was willing to engage in the ESR process again.

Those same surveys showed that researchers — some of whom had little experience thinking deeply about ethical issues — appreciated the structure of the ESR process and requested even more guidance in future reviews.

“The scaffolding was the biggest benefit researchers reported from undergoing the ESR process,” Bernstein says. “They said we forced them to stop and consider these issues and gave them some strategies for how to think about it that they didn’t necessarily have before.”

Raising Questions for Better Scientific Discovery

The ESR team aims to keep expanding the program, initially to other parts of the Stanford campus beyond AI, where it could help guide researchers working in areas such as sustainability or electoral research. The team also anticipates that the model, which they hope to evolve to focus more on coaching than on simple review, could easily be adopted at other universities and, perhaps in some form, in industry, where well-intentioned AI developers sometimes don’t know the ethical questions to ask.

Importantly, the ESR is not designed to limit research, to make all proposals risk-free, or to remove all negative impacts from AI development, says ESR team member Margaret Levi. “The ESR raises the questions; the decisions are ultimately up to the individual researcher,” Levi says. “What’s important is that researchers have a good reason for making their decisions and some strategy for mitigating those consequences that are able to be mitigated. What this does is create better scientific discoveries by thinking about what the downstream consequences could be ahead of time. It helps at the front end of research and can also help people down the line, as they make discoveries that turn out to have unintended consequences that couldn’t be anticipated ahead of time.”

The ESR will continue to be a part of HAI grant funding for the foreseeable future, says Landay.

“We think this is pretty innovative, and the results so far have been more positive than any of us would have expected; we’re only hearing enthusiasm for the program,” he says. “Our hope is to really get the model out there so others can see what’s possible and hopefully replicate it at their own universities. I think we’re surely going to see a model of this kind in one form or another start to become the norm in AI development.”

The ESR won’t resolve all the ethical and societal issues inherent in AI, but it could be a valuable tool for researchers and developers in the field, Landay says.

“There still has to be political will for the policies, laws and other approaches that can limit the negative impact of harmful technology, but this program is changing the conversation, how people are educated, and the culture,” he says. “This isn’t a solution, and it won’t solve all our problems. But it’s a piece of the puzzle that gets us to a better place.”

This work was supported by the Public Interest Technology University Network; Stanford’s Ethics, Society, and Technology Hub; and the Stanford Institute for Human-Centered Artificial Intelligence.