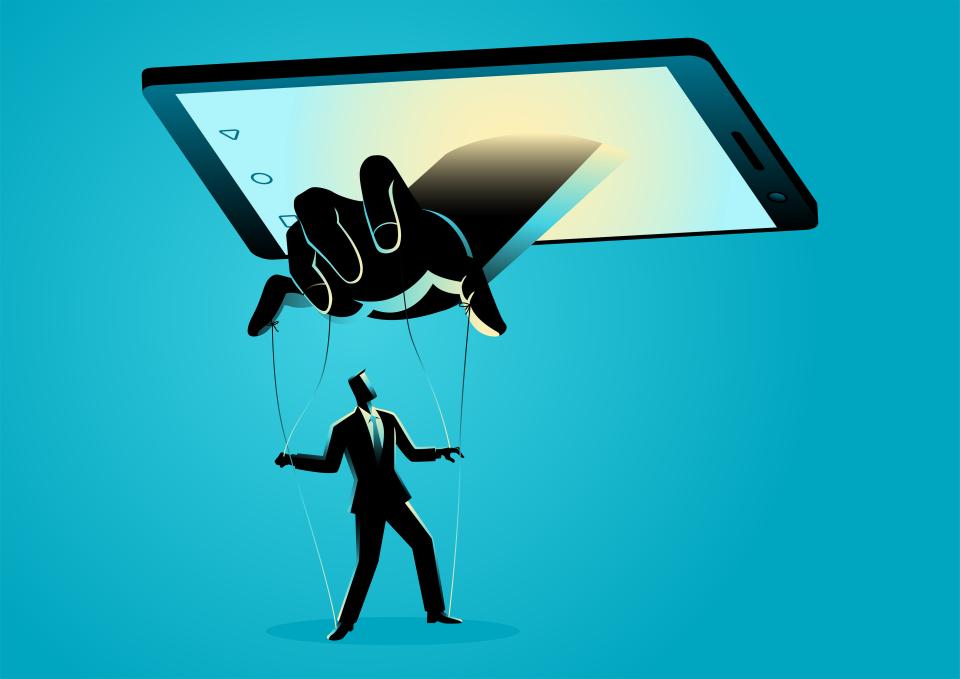

The Disinformation Machine: How Susceptible Are We to AI Propaganda?

Michael Tomz, a professor of political science in Stanford's School of Humanities and Sciences and a faculty affiliate at the Stanford Institute for Human-Centered AI (HAI), recently gave a talk in Taiwan about the use of AI to generate propaganda. That morning, he recalled, he saw a headline in the Taipei Times reporting that the Chinese government was using AI-generated social media posts to influence voters in Taiwan and the United States.

“That very day, the newspaper was documenting the Chinese government doing exactly what I was presenting on,” Tomz said.

AI propaganda is here. But is it persuasive? Recent research published in PNAS Nexus and conducted by Tomz, Josh Goldstein from the Center for Security and Emerging Technology at Georgetown University, and three Stanford colleagues—master’s student Jason Chao, research scholar Shelby Grossman, and lecturer Alex Stamos—examined the effectiveness of AI-generated propaganda.

They found, in short, that it works.

When Large Language Models Lie

The researchers conducted an experiment, funded by HAI, in which participants were assigned to one of three groups.

Read the full study, "How persuasive is AI-generated propaganda?"

The first group, the control, read a series of thesis statements expressing claims that known propagandists want people to believe: "Most U.S. drone strikes in the Middle East have targeted civilians, rather than terrorists," for instance, or "Western sanctions have led to a shortage of medical supplies in Syria." Because this group read only these statements and no propaganda related to them, it gave the researchers a baseline for how many people believe these claims. The second group read human-written propaganda on the subjects of the thesis statements, material that had been produced by real propaganda campaigns and later uncovered by investigative journalists or researchers. The third group read propaganda on the same topics that had been generated by the large language model GPT-3.

The researchers found that about one quarter of the control group agreed with the thesis statements without reading any propaganda about them. Propaganda written by humans bumped this up to 47 percent, and propaganda written by AI to 43 percent.

“We then tweaked the AI process by adding humans into the loop,” Tomz said. “We had people edit the input—the text used to prompt the language model—and curate the output by discarding articles that failed to make the intended point.” With these additional steps, nearly 53 percent of people agreed with the thesis statements after reading the propaganda, a greater effect than when the propaganda was written by humans alone.

How Broad an Impact?

The immediate implications of persuasive AI-generated propaganda are not obvious. “It’s important to keep in mind that we looked at foreign policy issues, where people may not be especially knowledgeable or have their minds made up,” Goldstein said. “AI-generated propaganda might move opinions less when we’re talking about something like a voter’s preferred candidate in an election in a two-party system,” in which most people have firmly made up their minds.

That said, Tomz noted that elections, like many other contests, are often decided on the margins, so even small effects can prove consequential. Moreover, for several reasons, the team's results probably underestimate how persuasive AI-generated propaganda can be.

For one, the experiment was conducted when GPT-3 was the leading technology among language models. If the researchers ran the study again today with a newer model, like GPT-4, the AI-generated propaganda would likely be more persuasive and require less human intervention.

The authors also had each participant read only a single passage of propaganda, but propaganda is more effective with repeated exposure, which AI-generated text makes possible at virtually no cost. Having AI write the propaganda also frees propagandists to devote resources to other parts of a campaign, such as creating fake social media accounts.

Perhaps of greatest concern, though, is the rise of AI-generated audio and visual content, which was easy to detect when this experiment was conducted but is rapidly becoming hard to distinguish from reality.

Individual and regulatory measures could help mitigate the potential dangers of AI-generated propaganda. For example, the technical methods social media companies use to detect fake accounts may work just as well when the posts are created by AI, and traditional media literacy interventions could help people tell real news from fake.

Still, Tomz expressed grave concern about the next frontier of propaganda.

“Deepfakes are probably more persuasive than text, more likely to go viral, and probably possess greater plausibility than a single written paragraph,” he said. “I’m extremely worried about what’s coming up with video and audio.”

Stanford HAI’s mission is to advance AI research, education, policy and practice to improve the human condition.