Fei-Fei Li: A Candid Look at a Young Immigrant’s Rise to AI Trailblazer

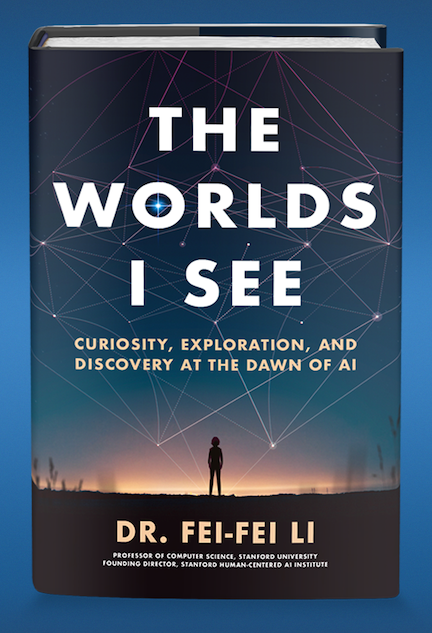

Fei-Fei Li's new book, "The Worlds I See," traces one young immigrant's rise to become a leader in AI.

Stanford HAI Co-Director and computer scientist Fei-Fei Li's path to becoming a leading voice in AI was not linear. As a young teenager, she flew with her family from China to New Jersey with less than $20 to make a new life in America. She struggled to learn English while keeping up in school, and spent her free time working in restaurants and at her parents' dry-cleaning business to help the family stay afloat. Her full scholarship to Princeton came as such a shock that she asked two different high school advisors to review the acceptance letter.

After an early focus on physics, Li developed a new interest in computer vision and neuroscience during her PhD at the California Institute of Technology (Caltech). That interest led to her groundbreaking project ImageNet, a database of 15 million images spread across 22,000 distinct categories and the largest image database ever created at the time. ImageNet set the stage for major advances in AI and neural networks.

Today Li co-directs the Stanford Institute for Human-Centered AI. She also sits on a UN AI advisory board, meets with the White House, and promotes an AI agenda that prioritizes human impact.

In her new book, The Worlds I See, Li draws parallels between her own path and the rapid advances in AI. In this conversation, she discusses her journey as an immigrant, the trials of building ImageNet, and why she remains hopeful about her field.

Your story is the American dream: a young immigrant who succeeds through talent and perseverance. How has your immigrant story shaped your research?

Every bit of my journey informs my research. Being a scientist is about resilience, because science is exploring the unknown, just as an immigrant is exploring the unknown. In both, you are on an uncertain journey and you have to find your own North Star. I actually think this is why I wanted to do human-centered AI. The immigration story, the dry-cleaning business, my parents' health: the journey I've been through is so deeply human. That gives me a lens and a perspective that is very different from that of a kid who maybe had a more anchored upbringing and a computer since age 5.

In many ways, your book feels like a tribute to mentors: a high school math teacher, PhD advisors, and several AI pioneers. Why were these people so important in your journey?

They saw something in me before I saw it in myself. As a woman of color, I don't walk around with a huge ego, and it's hard to be one of the few women, or the only woman, in technology. These mentors supported me and recognized strengths I couldn't yet see.

Most people know you as the creator of ImageNet. You describe how incredibly difficult it was to build, the many snags you encountered, and even some discouragement from respected mentors. Why did you keep going?

Delusion? Maybe it's the same kind of unbelievable conviction that took my parents to America. I think about that: they didn't speak a word of English, they didn't have more than $20 between them, and they didn't have an education or a social support network here. Why did they come? When I was younger, I didn't appreciate that. But maybe that passion is my inheritance, something that helped me find my own North Star.

You note that you have seen an exodus of students and faculty from academic research labs to AI companies. Is this common with major technological advances, and does it worry you?

Yes, we've seen this before. One example in computer science is hardware architecture design, where much of the work has shifted to industry. I don't necessarily think this is bad. But if the imbalance becomes too great, the implications are profound. Universities train talent, and they're always oriented toward the public good rather than profit. That healthy balance between blue-sky research for the public good, thought leadership, and deep technology is something our society needs. But I don't know how imbalanced we are right now. I don't think the generative AI cycle has fully played out.

You call AI disruptive, revolutionary, a puzzle, a force of nature. But you finally describe it as “a responsibility.” What do you mean by that?

It means recognizing that AI will shape the future so profoundly that agency must remain with us. We have to make the choices about how we want to build and use this technology. If we give up agency, it would be a free fall.

Why do you have so much hope for AI?

I see the younger generation stepping up as much more multidisciplinary thinkers and doers. They are enamored of AI, but they also embrace the ethical conversations: looking at it through a climate lens, for example, and thinking about fairness and bias. My generation was much more naive and undereducated, to be honest.

If readers could take away one main message from this book, what would it be?

This book is about finding my North Star in computer vision and human-centered artificial intelligence. I want to inspire others to find their North Stars. Anyone could be an AI leader, whether you're an immigrant, a woman, or someone facing struggles in life. I don't have the typical "tech bro" profile. And I want women, people of color, and people who face struggles or come from different backgrounds to see that they can define their own paths and find their own North Stars.

Stanford HAI’s mission is to advance AI research, education, policy and practice to improve the human condition.