A Simulated Playground for Robots

Stanford’s iGibson offers safe and realistic training for intelligent agents.

Clever robots may one day roam our homes putting away the dishes, folding the laundry, sweeping the kitchen floor, and setting the table. By doing everyday tasks, these intelligent machines could improve the quality of life for seniors, people with disabilities, and overwhelmed families, says Roberto Martin-Martin, a postdoctoral scholar at Stanford University’s Vision and Learning Lab.

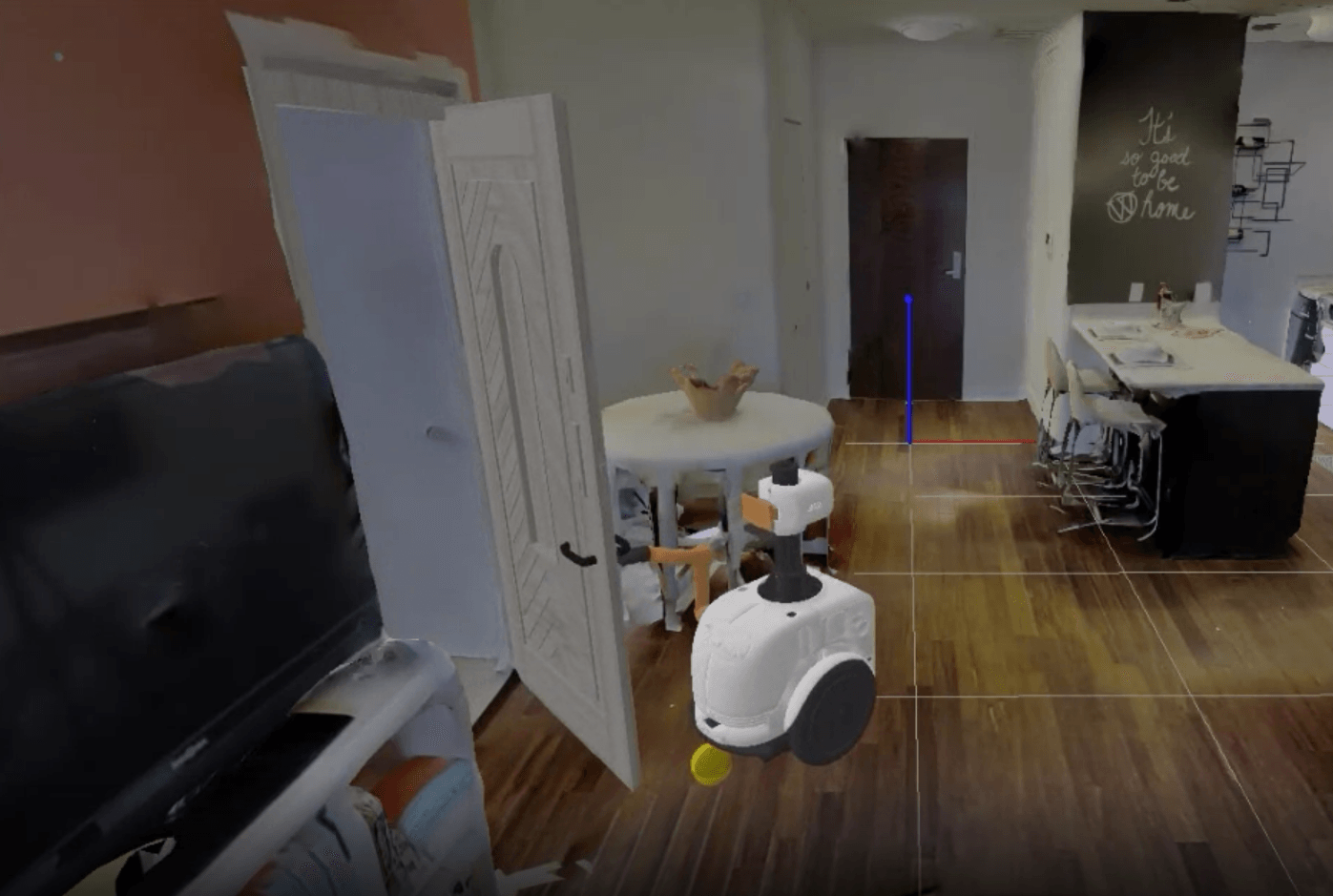

But training robots to safely navigate a home environment, let alone open a door or fold a towel, is challenging. When they are first learning, robots will smash dishes, crash into walls, and overturn furniture, not to mention damage themselves along the way. To address this problem, Stanford researchers have developed iGibson, a realistic, large-scale, and interactive virtual environment within which a robot model can explore, navigate, and perform tasks.

“iGibson is about creating the right playground for robot learning,” says Martin-Martin, who works on the iGibson project alongside Stanford computer science professors Silvio Savarese and Fei-Fei Li, as well as graduate students Fei Xia, Lyne Tchapmi, and Will Shen.

Some robots trained in iGibson have already proven their chops in real-world navigation. And iGibson’s interactive features will soon be tested in the real world as part of the Sim2Real Challenge, in which researchers train their robot algorithms in iGibson and then test the robots’ smarts in a real apartment at Stanford. “It’s a large-scale experiment in how well people can train in simulation and test in real-world navigation and interaction with real-world obstacles,” Martin-Martin says. Contest results will be final in June.

“Our goal is not to create a new robotic algorithm,” Savarese says, “but to create the environment where robots controlled by various algorithms can be trained and tested. Then those that are successful in iGibson can be validated in the real world.”

Realistic and Generalizable

In the past, simulated learning spaces for robots have often been unrealistic, with sparse furniture arranged as if in a home staged for sale. A robot trained to perform a task in such a pristine environment will be confused when it enters the messy, cluttered real world, Savarese says.

To create realistic, large-scale virtual environments in iGibson, the Stanford team used a 3D scanner to create photorealistic models of more than 750 buildings, each mirroring the structures’ overall layout as well as the clutter of furniture and other objects.

Virtual environments for robot learning also need to be large scale, Savarese says. That’s why iGibson includes 750+ buildings. “The goal is to achieve something that is critical for all intelligent agents, which is generalization,” he says. “Robots have to learn to perform tasks, but they also have to learn to generalize and transfer that information to a different setting.”

For example, Savarese says, if a robot visits (in iGibson) the virtual Gates building at Stanford and is told to find the bathroom, it will learn how to navigate the corridors of that specific building, find a specific colored bathroom door, and open it using a certain type of door handle. But what will it do in a different building with different architecture, lighting, wall colors, and doorknobs? “It will have an existential crisis,” Savarese says. “It won’t recognize objects, won’t find its way, won’t be able to open the doors.” But let the robot learn in all of the buildings at Stanford and then put it in a new building it has never seen before, and it will most likely find the bathroom.

“If you don’t have training environments that are realistic and generalizable because there are lots of them, then the robots aren’t going to learn what they need to know in order to function in the real world,” Martin-Martin says.

Learning Tasks

Interactivity provides the “i” that turns Gibson, which is named for psychologist and visual perception scholar James Gibson, into iGibson. Many of the buildings in the training environment are interactive: Robots can push and pull furniture and other objects, open doors and cabinets, and grasp and carry objects. Through these interactions, robots can learn the relationship between the visual and physical properties of objects, such as those made of wood or metal. In a sense, iGibson allows the robot to learn what humans call common sense, such as how objects will behave in response to certain forces.

“Instead of telling the robot what objects to interact with, which takes a lot of annotation of the data by humans,” Savarese says, “we just generate the environment where robots can learn the properties of objects by itself, which is very powerful.”

Going forward, the team hopes to add more interactive objects to iGibson, including clothes that can be folded, water taps and light switches that can be turned on or off, as well as vegetables to chop and bread to slice. This will allow robots to complete more interesting tasks, Martin-Martin says, and the more tasks a robot can perform in iGibson, the better it will be prepared for the real world.

The team has also added the option for robot-to-robot interaction in iGibson, and would like to expand that to include interaction with humans. “iGibson could become a playground for not only solving tasks as a solo activity but also in collaboration with other robots or with humans,” Martin-Martin says. “The idea is not that a robot should ‘do everything for you always,’ but rather be an enabler for you to do everything you want to do at home.”

iGibson is already being adopted by other researchers (it is open source and has been downloaded more than 50 times), which should create a catalytic effect for new research. And because robots will be trained much faster using iGibson, it should speed the development of domestic assistants to help people with everyday tasks.

“If we start to see robots that are able to go into big, realistic, fully interactive simulated environments,” Martin-Martin says, “that will be a good starting point for useful robotics in the real world.”

Watch a robot navigate the iGibson virtual environment.
