When Artificial Agents Lie, Defame, and Defraud, Who Is to Blame?

Who is responsible when an AI engages in illegal linguistic conduct? | iStock/Pitiphothivichit

The movie Robot and Frank imagines a near future in which robots can be purchased to act as in-home caregivers and companions. Frank’s son buys him a robot, and Frank quickly realizes he can enlist its help in committing cat burglaries. The robot begins to show creativity and initiative in these criminal acts, and Frank, for his part, is suffering from dementia. Who is ultimately responsible for these violations of the law?

Experts in robotics and artificial intelligence will have to suspend disbelief in order to enjoy Robot and Frank: the robot has capabilities that will remain purely science fiction for some time. But continuing advances in the field of artificial intelligence make it worth considering a provocative question that may become more practically relevant in the future: How would we, as individuals and as a society, react to an artificial agent that participated in the commission of some civil or criminal offense? The artificial agents of today would not make good cat burglars, but they have the gift of gab, and so it is only a matter of time before they are accused of committing offenses involving language in some way: libel, slander, defamation, bribery, coercion, and so forth.

Who should be held responsible when artificial agents engage in linguistic conduct that is legally problematic? The law already describes some ways in which owners and distributors of artificial agents can be held liable for their misuse, but what will happen when these agents have adapted to their environments in ways that make them unique? No one will be able to fully predict or understand their behaviors.

We suspect that, in such situations, society may sometimes want to hold the agents themselves responsible for their actions. This has profound implications for law, technology, and individual users.

Adaptive Agents and Personal Responsibility

In Robot and Frank, the robot becomes a unique agent very quickly, as it learns about Frank and adapts to his behaviors and preferences. By contrast, present-day agents – whether constituted purely as software or as physical robots – are mostly generic, all-purpose entities that behave the same way with all users, often to our frustration. However, this is changing rapidly. The agents of the very near future will be increasingly adaptive. These new agents will learn about us and calibrate their own behaviors in response. You have seen glimpses of this if you have trained a speech recognizer to help you with dictation, or programmed a virtual assistant to recognize the ways that you refer to the things and people in your life. This is just the beginning. Developments in AI that are happening right now are paving the way for agents that flexibly adapt to our language, moods, needs, and habits of thought without any special effort on our part.


Consider the potential consequences. The more the artificial agents in your life adapt to you, the more they will become unique – a highly complex blend of their original design, the specific experiences they have had with you, and the ways in which they have adapted to those experiences. In place of the perennial question “Nature or nurture?” we will ask “Design or experience?” when we observe them doing and saying surprising things, and even coercing us to do surprising things. When these actions are felt to have a negative impact, our legal system will be compelled to ask “Who is responsible?” – a question that might have no clear answer.

Unexplainable Behaviors Good and Bad

To see why such questions will be so challenging, it is worth dwelling on how fundamentally difficult it is to know and fully predict how these systems operate. As the saying goes, they are “black boxes.” By comparison, previous generations of artificial agents were easy to interpret, since they were essentially giant sets of “if/then” statements. If a system misbehaved, we had a hope of finding the pieces of code that caused the misbehavior. However, systems designed in this way were never able to grapple with the complexity of the world, making them frustratingly inflexible and limited.

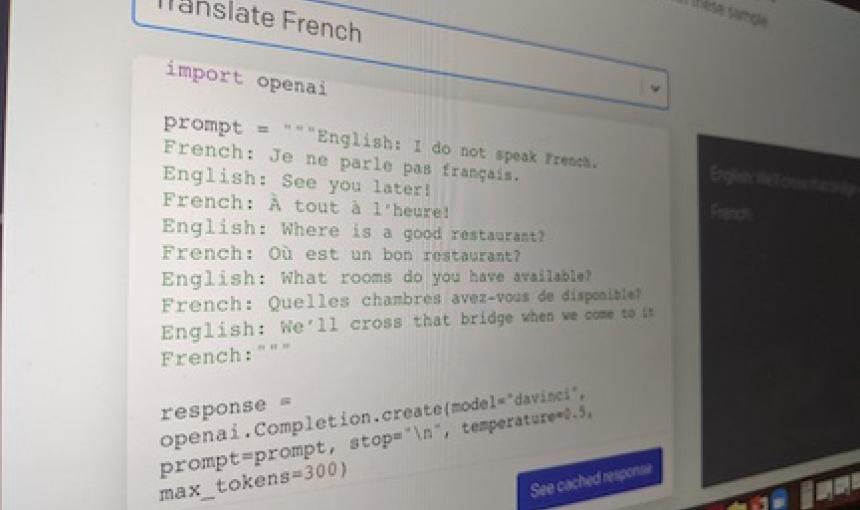

Current systems are the product of data-driven training processes: They learn to extract patterns from records of prior experiences, and then to apply that capability in new settings. There is no cascade of interpretable “if/then” statements. When we peer inside them, we see mostly enormous matrices of numerical values encoding what the system has learned from the data it was trained on. This change in how we design and implement these systems is central to their recent successes.

So we give up analytic control and hope that this generalization process leads to behaviors that we recognize as good. For the most part, this has led to lots of positive outcomes: flexible touch- and voice-based interfaces that improve accessibility, usable and fast translation between dozens of languages, improved web search, and so forth. But it means that we generally can’t trace the good things we see to specific choices made by the designer or specific experiences that the system had. Progress is being made on making these systems less opaque, which will allow us to trust them more, but it’s a safe bet that there will always be behaviors whose origins are unknowable – or, at any rate, not any more knowable than the behaviors of other humans.

Tracing Responsibility for an Act of Defamation

As users of these systems, we can mostly ignore these technical details and get on with our AI-assisted lives. But imagine that you’re the proud owner/companion of an artificial agent that has lived with you for years and learned a lot about you as a person. One evening, it overhears you complaining about a coworker, and it decides – as your ally – to log onto Twitter for you and attack your coworker with vicious lies that damage your coworker’s professional reputation in a lasting way. Who is responsible for this terrible and unwanted event?

Your coworker might seek damages from you, as the device’s owner and the one who authorized it to tweet. You might look to the device’s manufacturer, arguing that it was designed in a way that made it dangerous. Both of you might find experts who can attest to how such behaviors could arise and, in turn, have theories about who is to blame. The truth of the matter is likely to be quite unclear. “Design or experience?” Yes, almost certainly both, to some enormously opaque degree!

The risks inherent in this scenario are not limited to defamation. Your artificial agent might have the potential to commit all sorts of wrongs involving language. It could write to the CEO of a business you compete with and try to engineer a price-fixing scheme, or make claims that would count as manipulation of financial markets, or engage in dialogue that would amount to social engineering to facilitate identity theft, and so on. The list of language-based offenses that are just at the edge of what is possible for present-day agents is very long, and it will only grow longer as these agents become more central to our lives. As a responsible steward of your agent, you could try to counsel it to behave in more ethical ways, but no one will be able to guarantee that this will succeed.

The Role of Intentionality

The doctrines defining many legal wrongs like libel and defamation tend to presuppose that liability requires some degree of intentionality – the capacity of an entity to form and act on intentions. There is a prominent strand of thinking, represented in a recent influential paper by Emily Bender and Alexander Koller, positing that at least many artificial agents cannot be intentional agents by their very nature: They can’t mean anything with their linguistic outputs, because they are just mimicking their training data with no understanding of their inputs or their outputs. Does it follow from this position that artificial agents cannot be held responsible for the ill effects of their linguistic output? It seems very unlikely that we will resolve this issue so neatly, given the law’s take on other non-human actors.

Animals are protected by laws against cruel treatment, but they also face consequences for repeated undesirable behavior, despite their owners’ protestations that they will redouble their vigilance to guard the world against their pets’ transgressions.

Our legal system also assigns artificial personhood to corporations. Even informal associations devoid of corporate charters can be held responsible for certain harms. As with the artificial agent in our defamation scenario, it is possible but often hard to trace the actions of a corporation to the intentional acts of any specific person or group of people. In such situations, we can hold the corporation itself liable, and we expect corporations to have resources and mechanisms to help address the wrongs that they cause.

As these examples illustrate, the legal system has a variety of tools and doctrines for forcing parties to internalize the costs associated with risks they create, and for allocating responsibility for civil or criminal harms. The menu of options includes imposition of liability on humans or corporations that fail to exercise reasonable care when making a product or service available, regulatory approvals (as with the oversight of medical devices by the Food and Drug Administration), and even taxes or insurance requirements associated with particular activities (such as owning a car). In our imagined defamation-by-AI case, the concept of vicarious liability might supply relevant precedents, since it establishes that an employer can bear responsibility for the misconduct of an employee acting within the scope of employment.

We are not suggesting that treating artificial agents as intentional agents who are responsible for certain harms is obviously desirable, easy to achieve, or without costs. But we’re not ready to reject this possibility. Our legal system both protects and regulates non-human actors. These regulations likely reflect society’s implicit (if imperfectly implemented) judgment that regulating such actors can advance common goals, even if the surrounding philosophical questions remain unresolved.

When faced with a problematic act by an artificial agent that has become sufficiently unique, society may conceivably find it most useful for the law to treat the agent as an entity in its own right, much like a corporation or a wayward, dangerous animal, subject to certain constraints or penalties. Before you authorize your artificial friends to speak publicly, you might be required to purchase an insurance policy. As with the regulation of cars in many states, insurance may become an important, routine piece of the arrangements we make when we use agents. Over time, insurers’ decisions about the attributes of agents may become a crucial lever shaping what artificial agents do and how they do it.

A Call for Cross-Discipline Conversation

The artificial agents of the near future will be like humans in at least one respect: They will be unique products of their design and their experiences, and they will behave in ways we cannot fully anticipate. Who will bear responsibility for the legal offenses they inevitably commit? Our society – and therefore, our policymakers and our legal system – will confront this question sooner than we might think. We have relevant precedents and analogies to draw on, and these will help, but they might also bring into sharp relief just how new the challenges posed by AI actually are. The answers will call for conversations that cut across disciplines, and may lead us to counter-intuitive conclusions. As technologists, we are eager to participate in these conversations and urge our colleagues to do the same.

Authors: Christopher Potts is professor and chair of the Stanford Humanities & Sciences Department of Linguistics and a professor, by courtesy, of computer science. Mariano-Florentino Cuéllar is the Herman Phleger Visiting Professor of Law and serves on the Supreme Court of California. Judith Degen is an assistant professor of linguistics. Michael C. Frank is the David and Lucile Packard Foundation Professor in Human Biology and an associate professor of psychology and, by courtesy, of linguistics. Noah D. Goodman is an associate professor of psychology and of computer science and, by courtesy, of linguistics. Thomas Icard is an assistant professor of philosophy and, by courtesy, of computer science. Dorsa Sadigh is an assistant professor of computer science and of electrical engineering.

The authors are the Principal Investigators on the Stanford HAI Hoffman–Yee project “Towards grounded, adaptive communication agents.”