Was this written by a human or AI?

New research shows we can only accurately identify AI writers about 50% of the time. Scholars explain why (and suggest solutions).

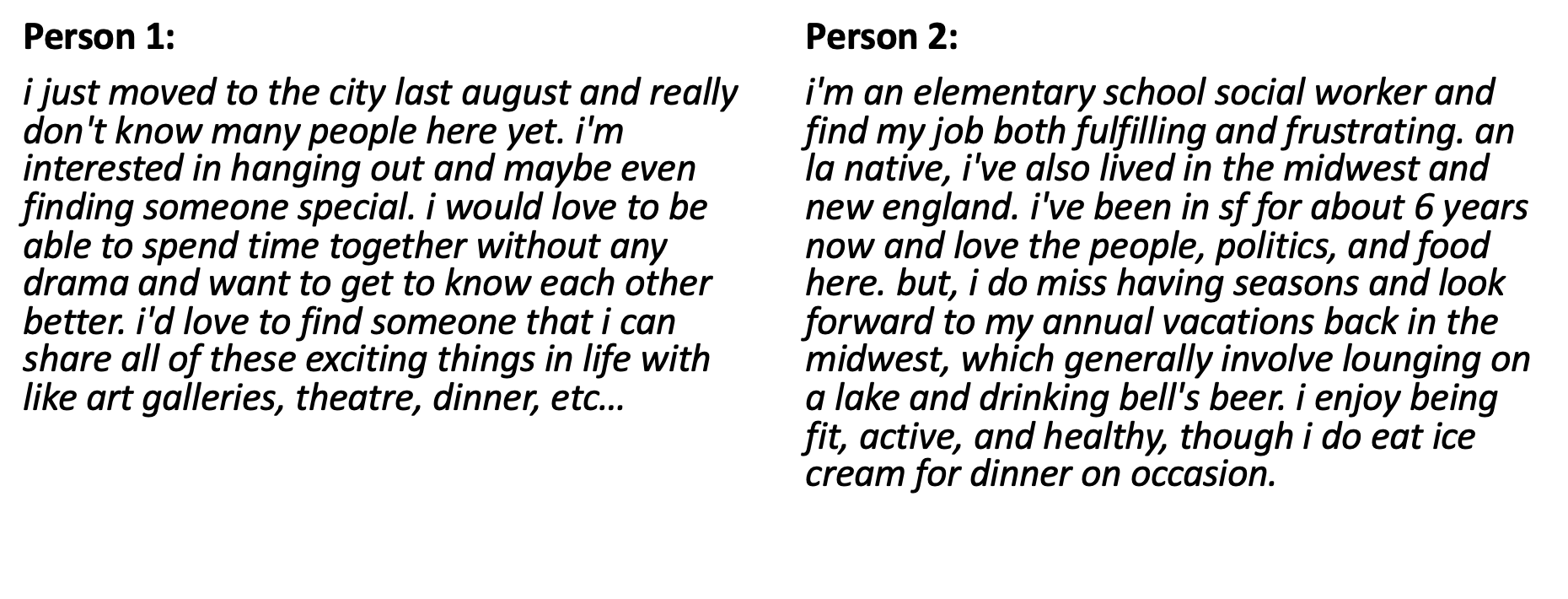

Picture this. You’re browsing on OkCupid when you come across two profiles that catch your eye. The photos are genuine and attractive to you, and the interests described match your own. Their personal blurbs even mention outings that you enjoy:

All things being equal, how might you decide between the two profiles? Not sure? Well, what if you were told that one of the blurbs - Person 1 - was generated by AI?

AI-generated text is increasingly making its way into our daily lives. Auto-complete in emails and ChatGPT-generated content are becoming mainstream, leaving humans vulnerable to deception and misinformation. Even in contexts where we expect to be conversing with another human - like online dating - the use of AI-generated text is growing. A survey from McAfee indicates that 31% of adults plan to use or are already using AI in their dating profiles.

What are the implications and risks of using AI-generated text, especially in online dating, hospitality, and professional situations, areas where the way we represent ourselves is critically important to how we are perceived?

“Do I want to hire this person? Do I want to date this person? Do I want to stay in this person’s home? These are things that are deeply personal and that we do pretty regularly,” says Jeff Hancock, professor of communication at Stanford School of Humanities and Sciences, founding director of Stanford’s Social Media Lab, and a Stanford Institute for Human-Centered AI faculty member. Hancock and his collaborators set out to explore this problem space by looking at how successful we are at differentiating between human and AI-generated text on OkCupid, Airbnb, and Guru.com.

What Hancock and his team learned was eye-opening: participants in the study could distinguish between human and AI text with only 50-52% accuracy, about the same as the random chance of a coin flip.

The real concern, according to Hancock, is that we can create AI “that comes across as more human than human, because we can optimize the AI’s language to take advantage of the kind of assumptions that humans have. That’s worrisome because it creates a risk that these machines can pose as more human than us,” with a potential to deceive.

Low Accuracy, High Agreement

An expert in the field of deception detection, Hancock wanted to use his knowledge in that area to address AI-generated text. “One thing we already knew is that people are generally bad at detecting deception because we are trust-default. For this research, we were curious, what happens when we take this idea of deception detection and apply it to generative AI, to see if there are parallels with other deception and trust literature?”

Read the full study, Human Heuristics for AI-generated Language are Flawed

After presenting participants with text samples across the three social media platforms, Hancock and his collaborators discovered that although we are not successful at distinguishing between AI and human-generated text, we aren’t entirely random either.

We all rely on similar - albeit similarly incorrect - assumptions based on reasonable intuition and shared language cues. In other words, we often get the wrong answer — human or AI — but for reasons we agree on.

For example, high grammatical correctness and the use of first-person pronouns were often attributed, incorrectly, to human-generated text. Referencing family life and using informal, conversational language were also, incorrectly, attributed to human-generated text. “We were surprised by the core insight that my collaborator Maurice Jakesch had, that we were all relying on the same heuristics that were flawed in the same ways,” says Hancock.

Hancock and his team also observed no meaningful difference in accuracy rates by platform, meaning participants were equally flawed at evaluating text passages in online dating, professional, and hospitality contexts.

Risk For Misinformation

Because of these flawed heuristics, and because it’s becoming cheaper and easier to produce content, Hancock believes we’ll see more misinformation in the future. “The volume of AI-generated content could overtake human-generated content on the order of years, and that could really disrupt our information ecosystem. When that happens, the trust-default is undermined, and it can decrease trust in each other.”

So how can we become better at differentiating between AI and human-generated text? “We all have to be involved in the solution here,” says Hancock.

One idea the team proposed is giving AI a recognizable accent. “When you go to England, you can kind of tell where people are from, and even in the U.S., you can say if someone is from the East Coast, LA, or the Midwest. It doesn’t require any cognitive effort — you just know.” The accent could even be merged with a more technical solution, like AI watermarking. Hancock also suggests that in high-stakes scenarios where authentication is valuable, self-disclosing machines might become the norm.

Even so, Hancock acknowledges that we are years behind in teaching young people about the risks of social media. “This is just another thing they’re going to need to learn about, and so far are receiving zero education and training on it.”

Despite the current murkiness, Hancock remains optimistic. “Seeing the bold glamour filter [on TikTok] yesterday was a little bit shocking. But we often focus on the tech, and we ignore the fact that we humans are the ones using it and adapting to it. And I’m hopeful that we can come up with ways to control how this is done, create norms and even perhaps policies and laws that constrain the negative uses of it.”

Stanford HAI’s mission is to advance AI research, education, policy and practice to improve the human condition.