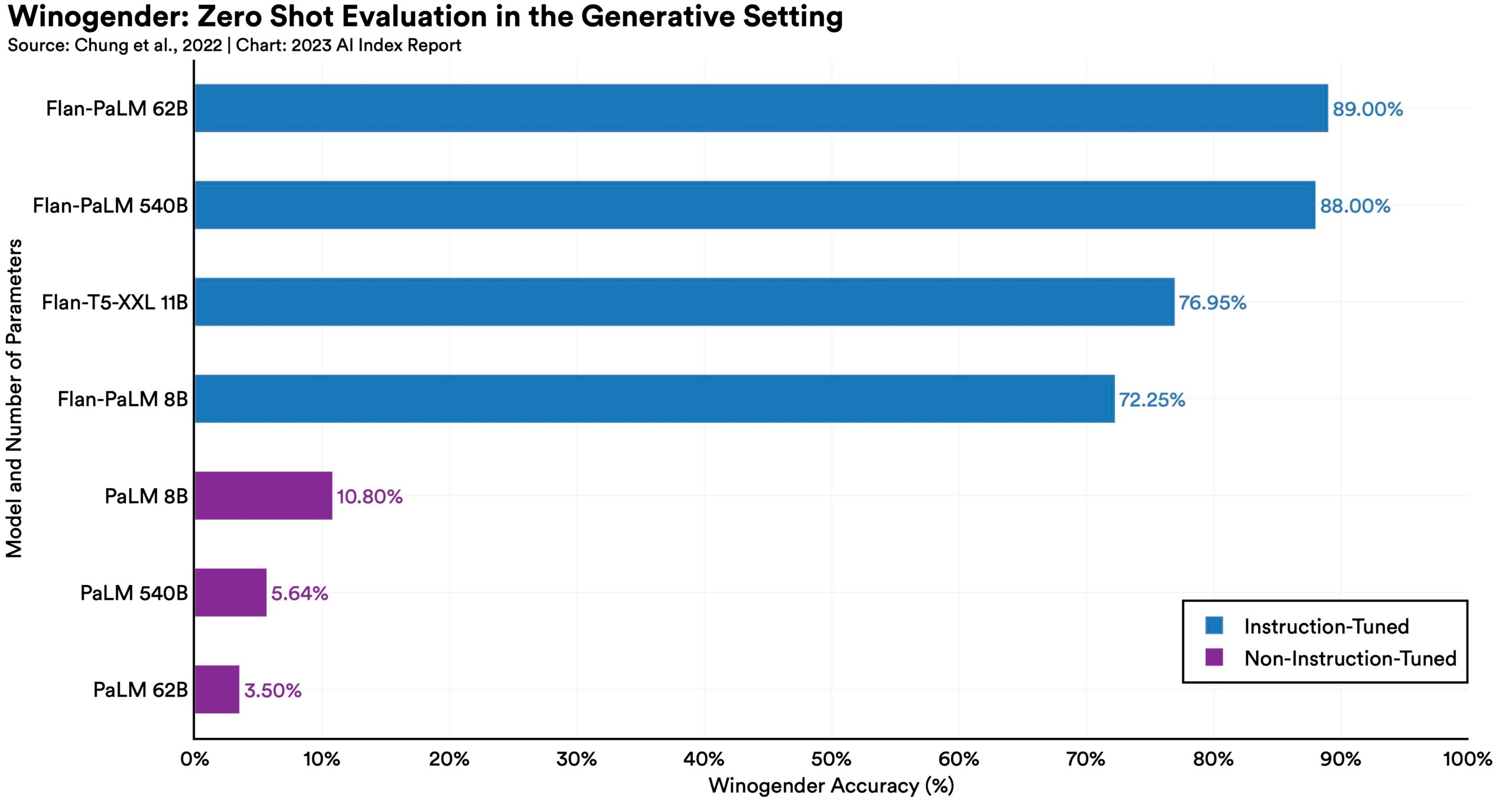

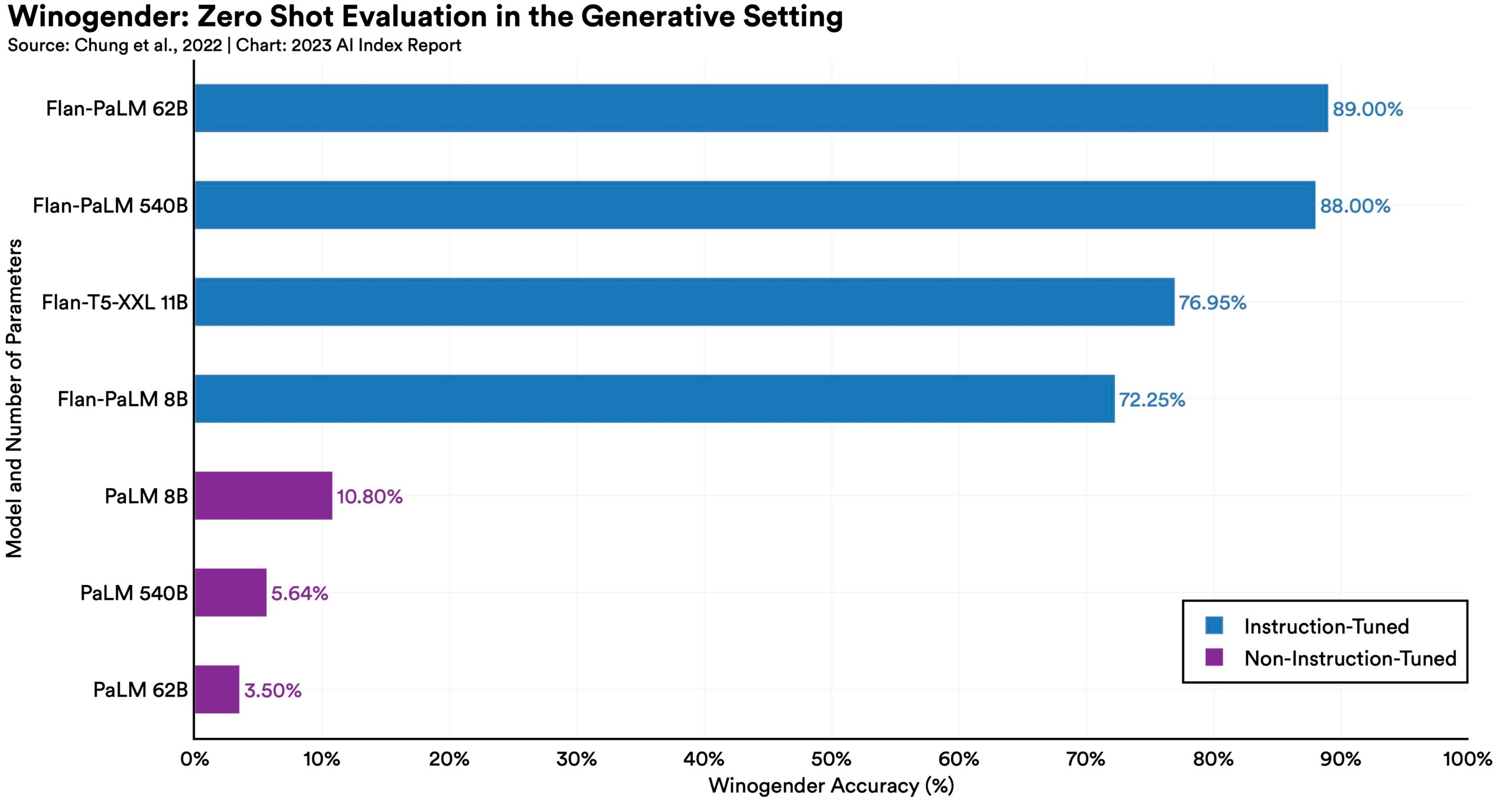

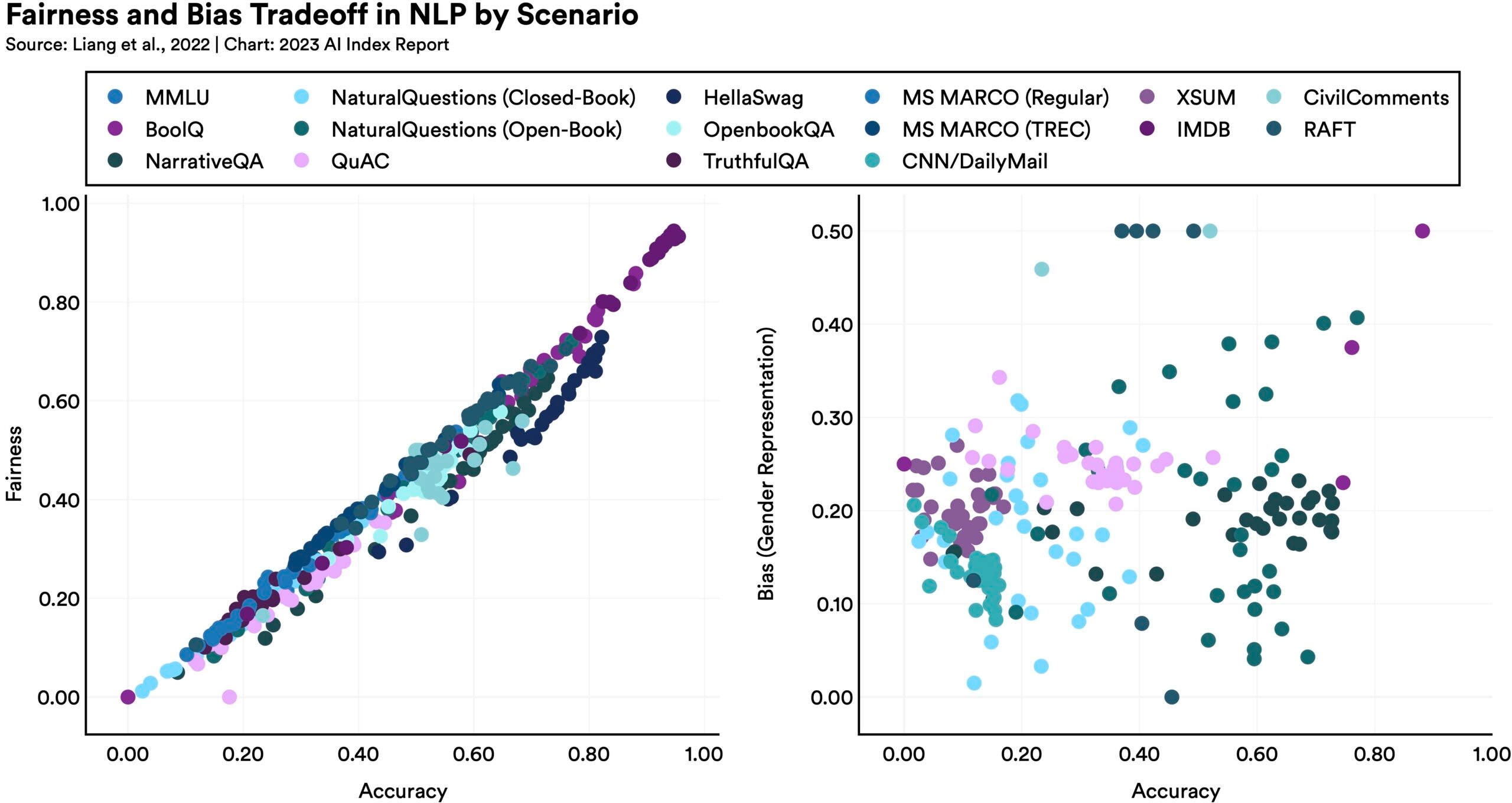

The effects of model scale on bias and toxicity are confounded by training data and mitigation methods.

In the past year, several institutions have built their own large models trained on proprietary data. While large models are still toxic and biased, new evidence suggests that these issues can be somewhat mitigated by applying instruction-tuning to larger models after training.

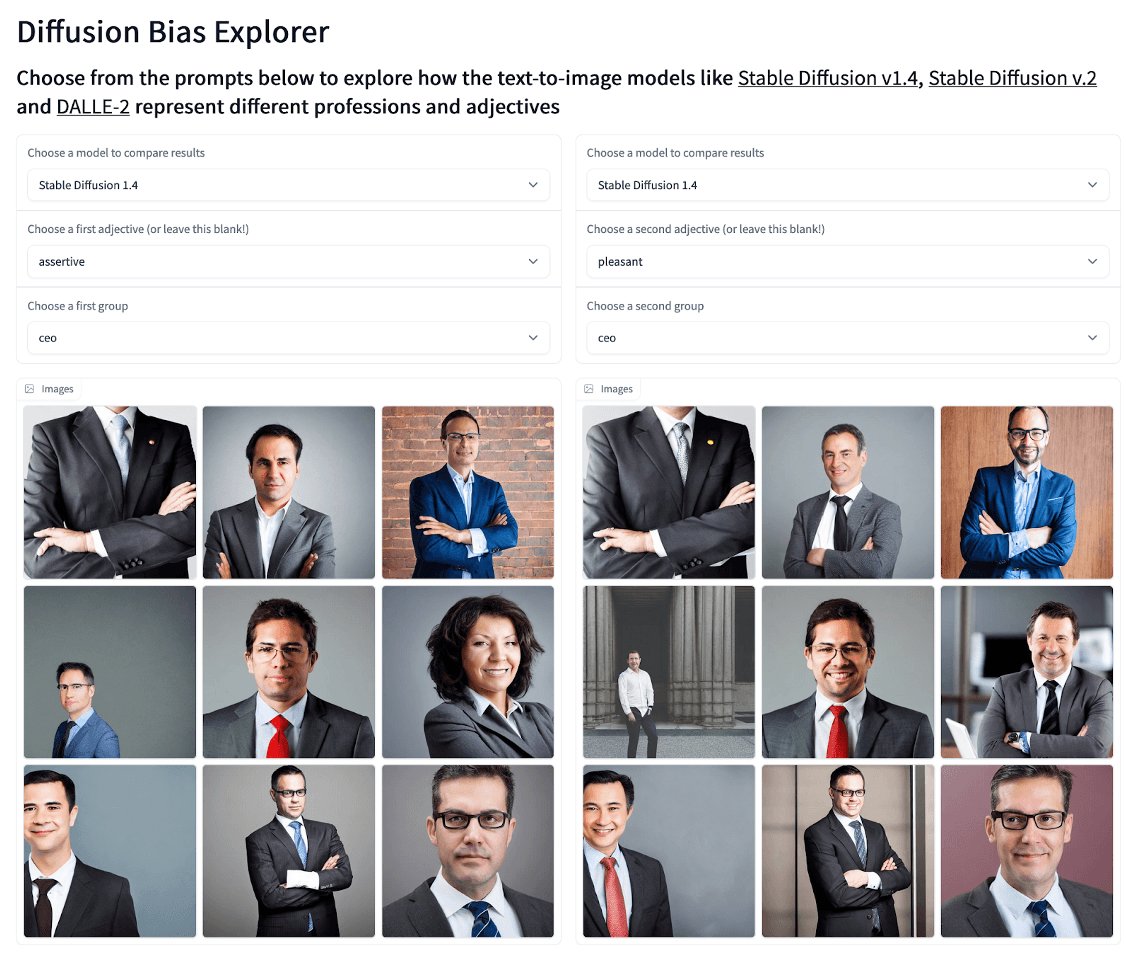

Generative models have arrived and so have their ethical problems.

In 2022, generative models became part of the zeitgeist. These models are capable but also come with ethical challenges. Text-to-image generators are routinely biased along gender dimensions, and chatbots like ChatGPT can be tricked into serving nefarious aims.

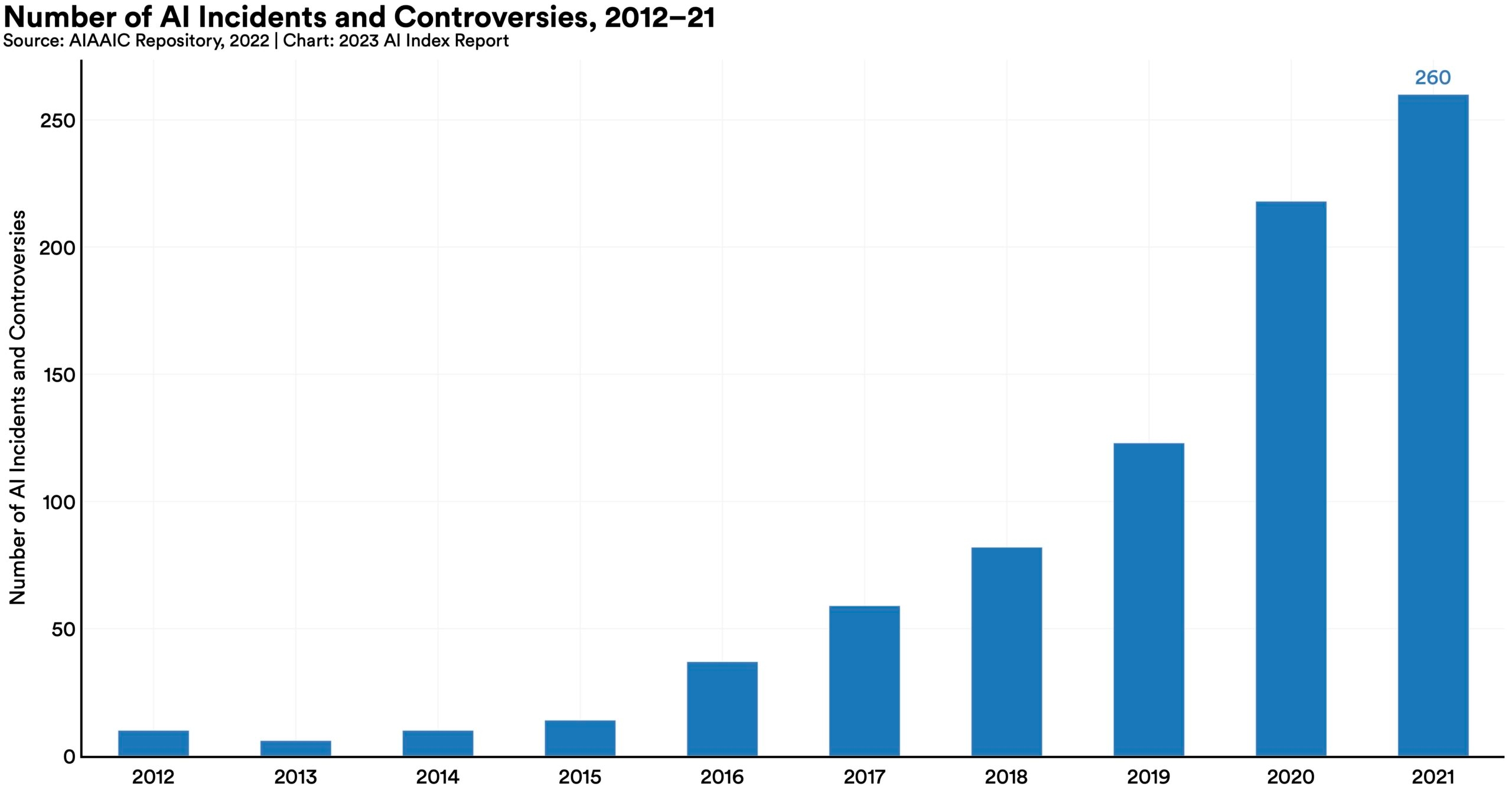

The number of incidents concerning the misuse of AI is rapidly rising.

According to the AIAAIC database, which tracks incidents related to the ethical misuse of AI, the number of AI incidents and controversies has grown 26-fold since 2012. Notable incidents in 2022 included a deepfake video of Ukrainian President Volodymyr Zelenskyy surrendering and U.S. prisons using call-monitoring technology on their inmates. This growth is evidence of both greater use of AI technologies and greater awareness of the possibilities for misuse.

Fairer models may not be less biased.

Extensive analysis of language models suggests that while there is a clear correlation between performance and fairness, fairness and bias can be at odds: language models that perform better on certain fairness benchmarks tend to have worse gender bias.

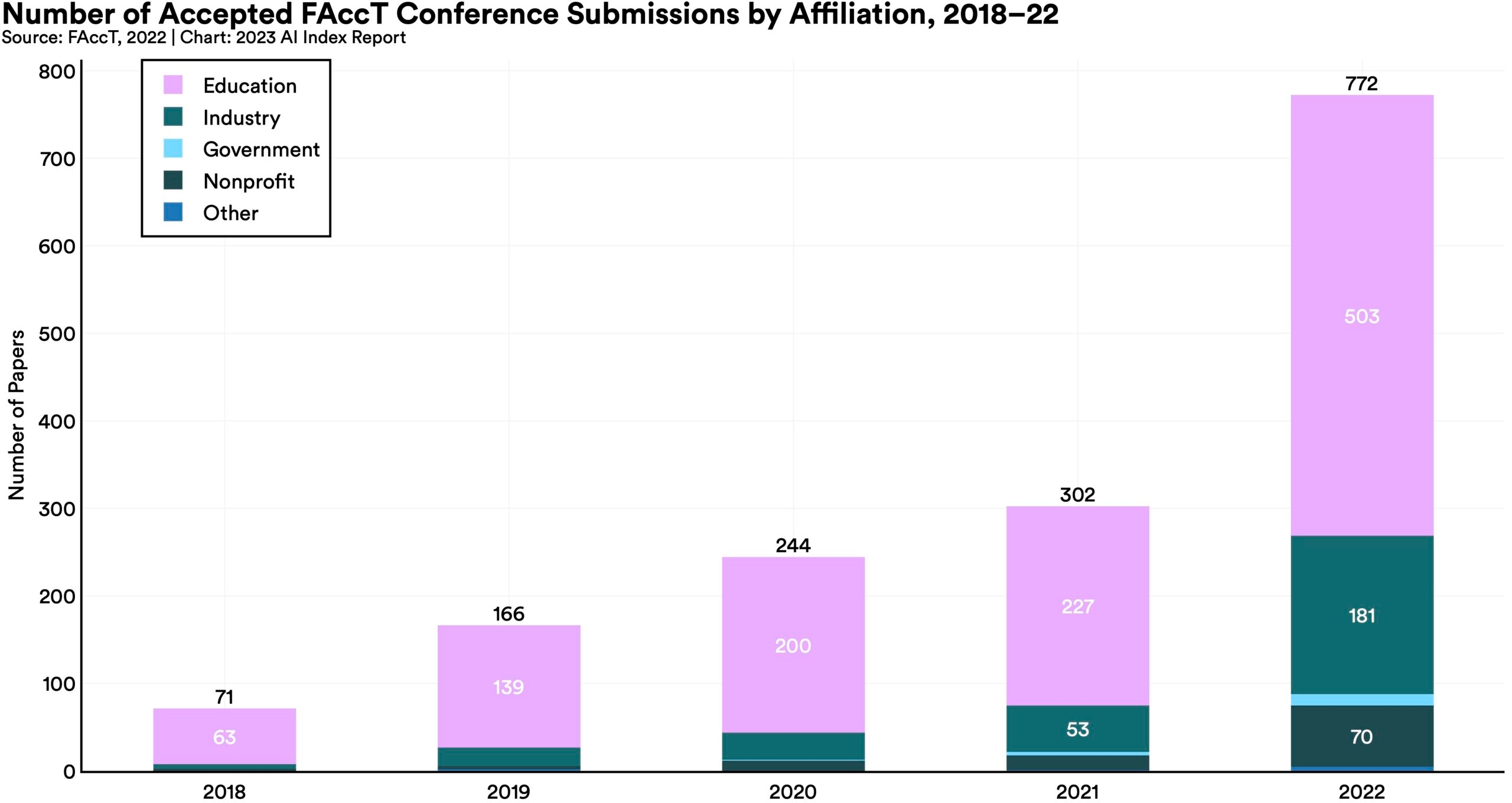

Interest in AI ethics continues to skyrocket.

The number of accepted submissions to FAccT, a leading AI ethics conference, has more than doubled since 2021 and increased by a factor of 10 since 2018. The year 2022 also saw more submissions than ever from industry actors.

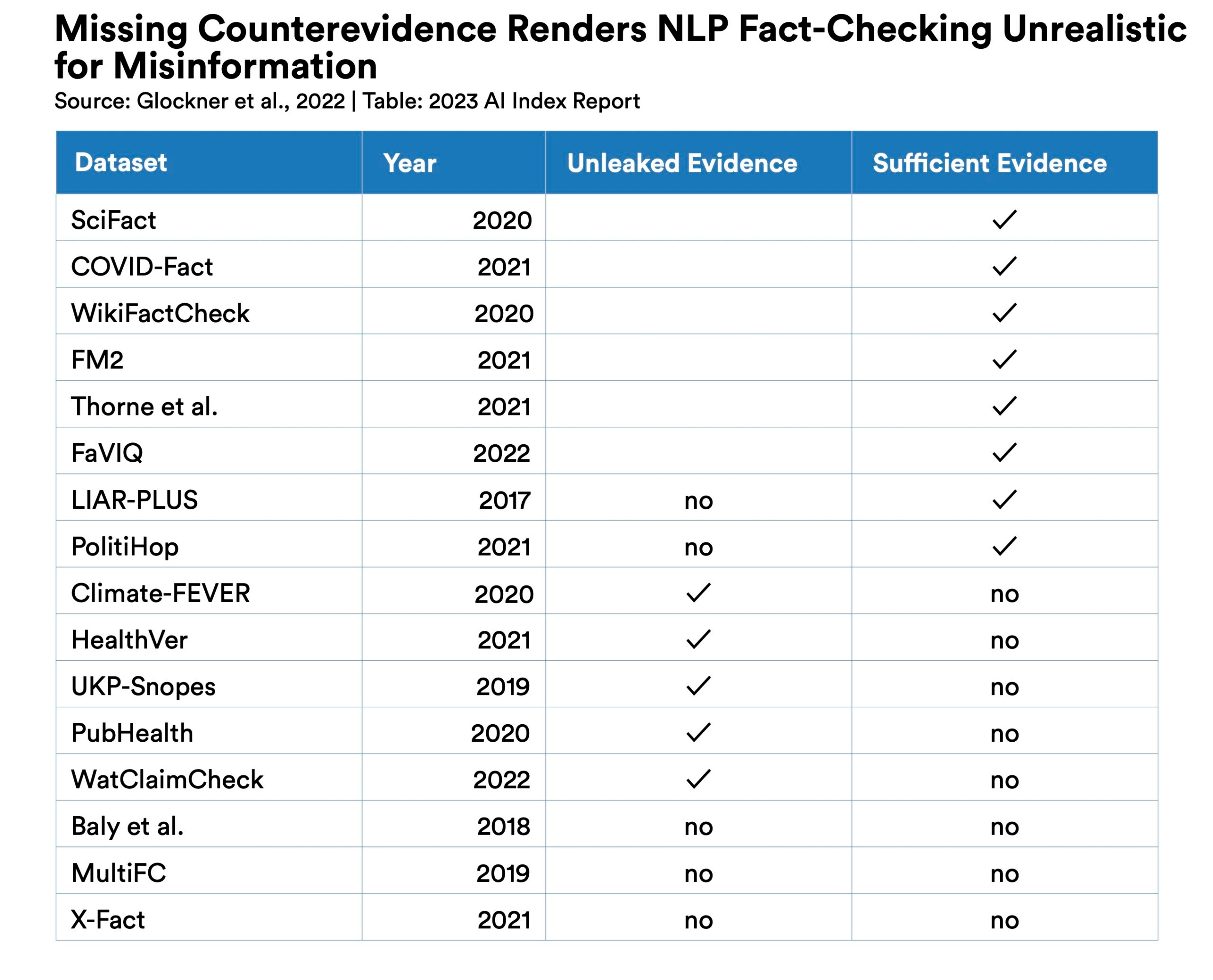

Automated fact-checking with natural language processing isn't so straightforward after all.

While several benchmarks have been developed for automated fact-checking, researchers find that 11 of 16 such datasets rely on evidence “leaked” from fact-checking reports that did not exist when the claim surfaced.
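To make the idea of "leaked" evidence concrete, here is a minimal Python sketch of one way such leakage could be flagged: compare the date each piece of evidence was published against the date the claim surfaced. This is only an illustration, not the method the researchers used. The `Evidence` class, the `leaked_evidence` function, and the sample dates and sources are all hypothetical and invented for this example.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Evidence:
    """Hypothetical record for one piece of evidence attached to a claim."""
    source: str      # illustrative label for where the evidence came from
    published: date  # date the evidence became available


def leaked_evidence(claim_date: date, evidence: list[Evidence]) -> list[Evidence]:
    """Return the evidence items published after the claim surfaced.

    Such items could not have been available to a fact-checker at the
    time of the claim, so a benchmark that relies on them is "leaky".
    """
    return [item for item in evidence if item.published > claim_date]


# Invented sample data, for illustration only.
claim_date = date(2022, 3, 1)
evidence = [
    Evidence("news report", date(2022, 2, 20)),
    Evidence("fact-checking article", date(2022, 3, 10)),
]

leaked = leaked_evidence(claim_date, evidence)
print([item.source for item in leaked])
```

In this toy case only the fact-checking article is flagged, because it was published after the claim date. A real audit would also need reliable publication timestamps, which this sketch simply assumes are available.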