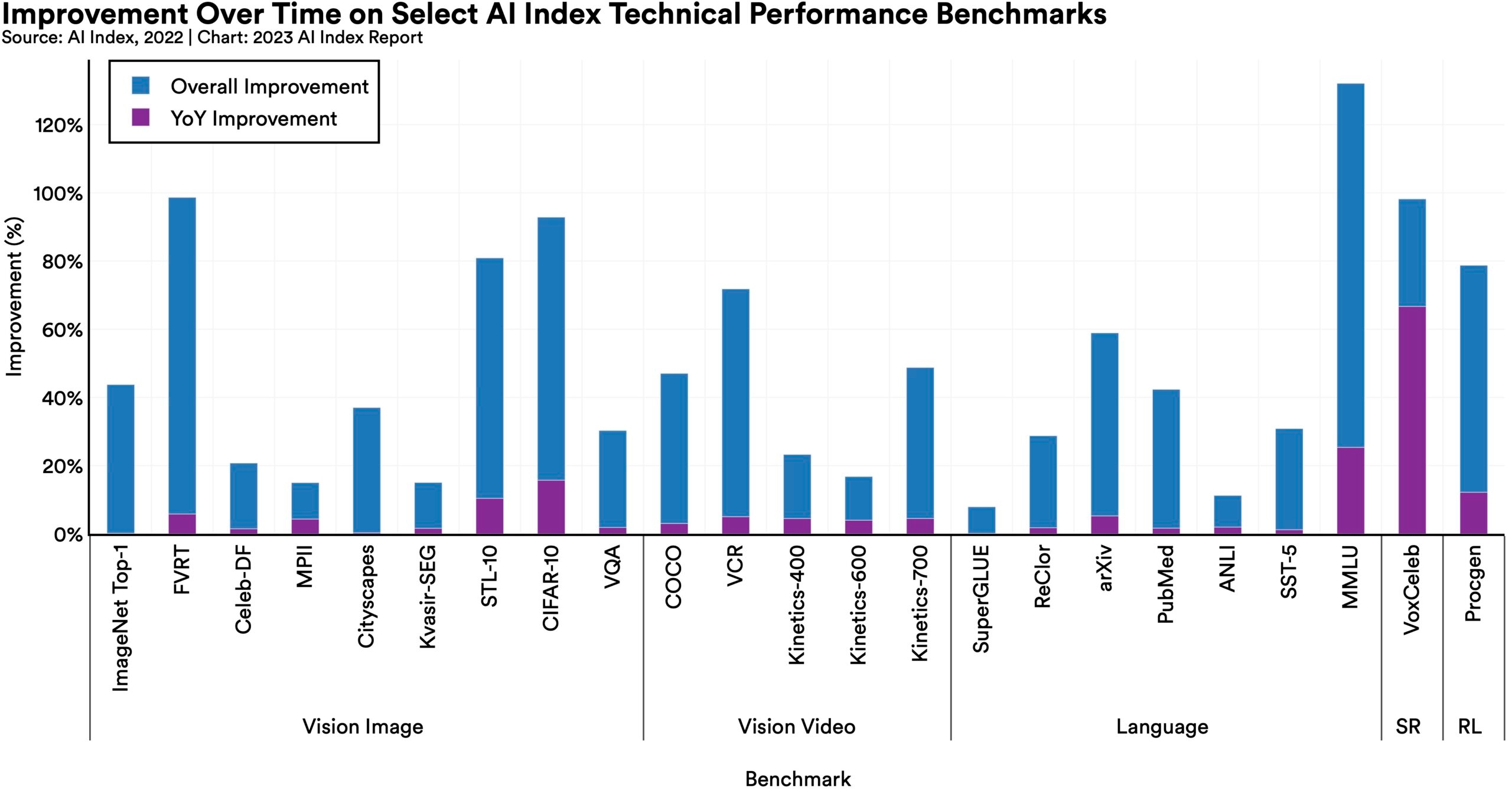

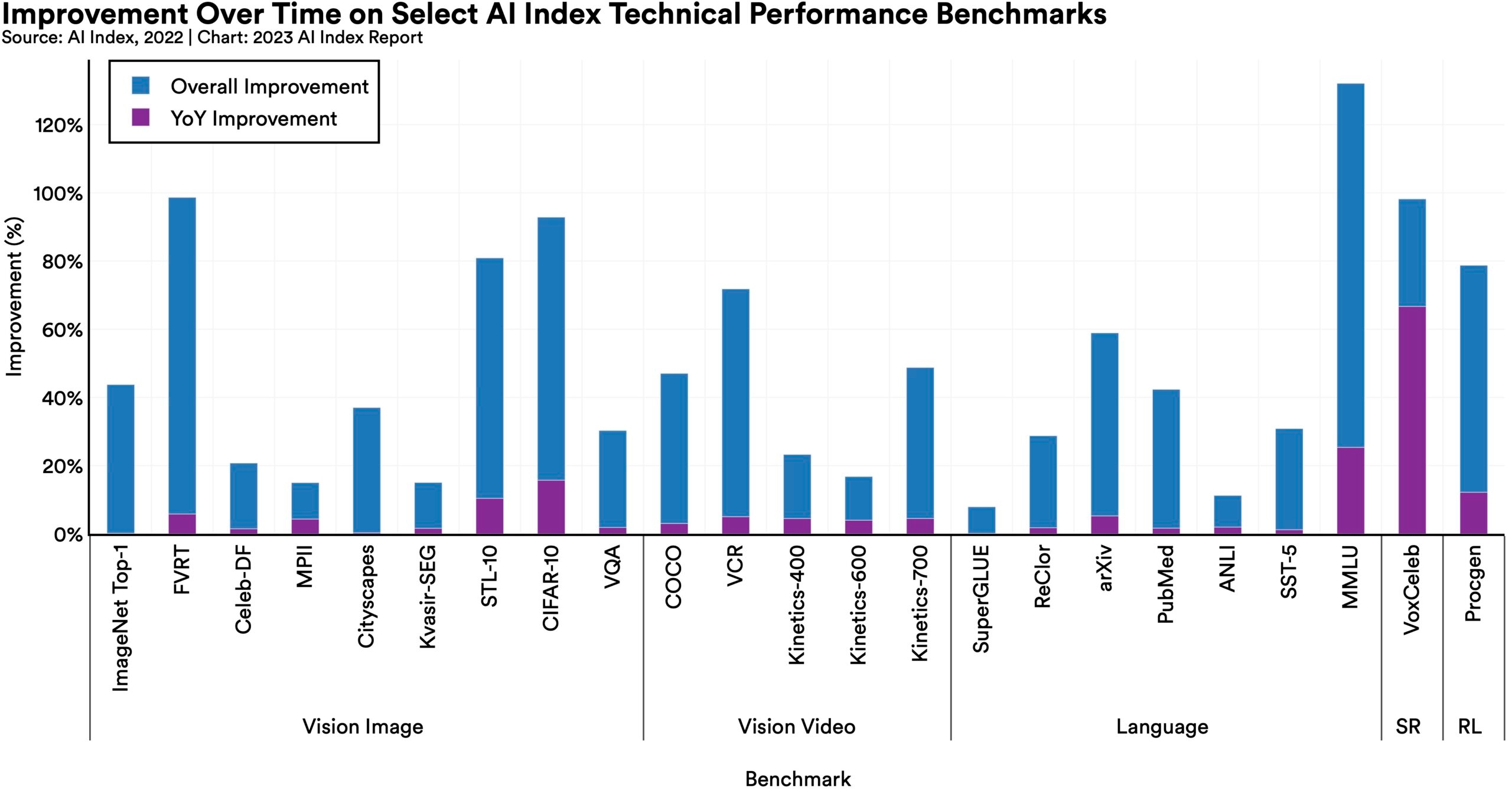

Performance saturation on traditional benchmarks.

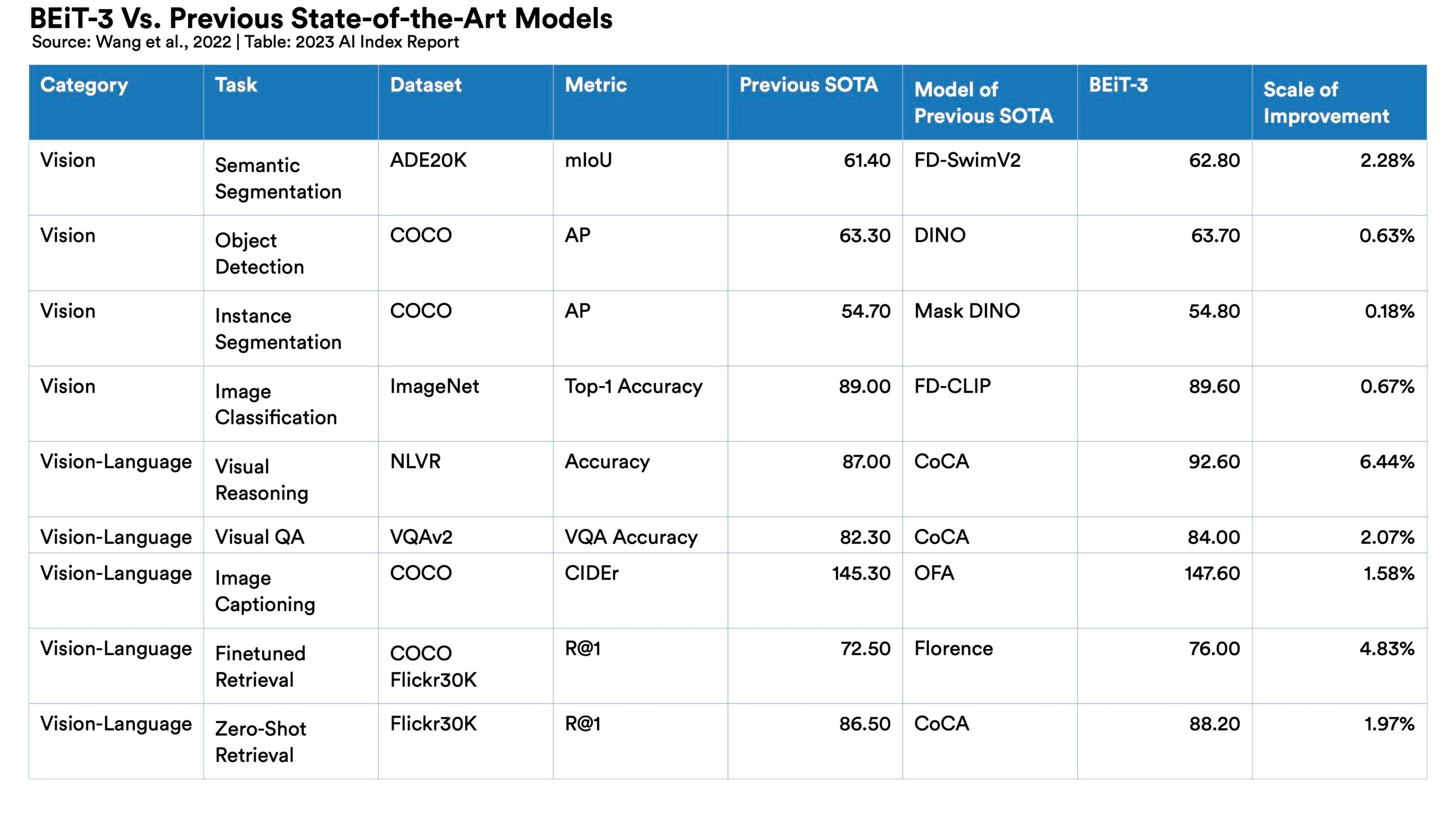

AI continued to post state-of-the-art results, but year-over-year improvement on many benchmarks remained marginal. Moreover, benchmarks are reaching saturation faster than before. However, new, more comprehensive benchmarking suites such as BIG-bench and HELM are being released.

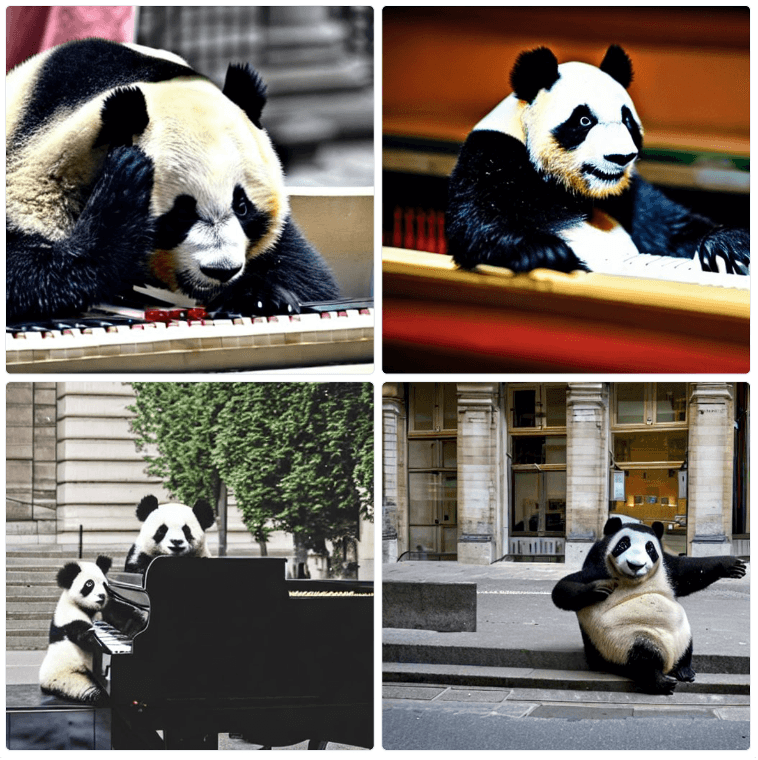

Generative AI breaks into the public consciousness.

2022 saw the release of text-to-image models like DALL-E 2 and Stable Diffusion, text-to-video systems like Make-A-Video, and chatbots like ChatGPT. Still, these systems can be prone to hallucination, confidently outputting incoherent or untrue responses, making it hard to rely on them for critical applications.

AI systems become more flexible.

Traditionally, AI systems have performed well on narrow tasks but have struggled across broader tasks. Recently released models challenge that trend: BEiT-3, PaLI, and Gato, among others, are single AI systems increasingly capable of handling multiple tasks (for example, vision and language).

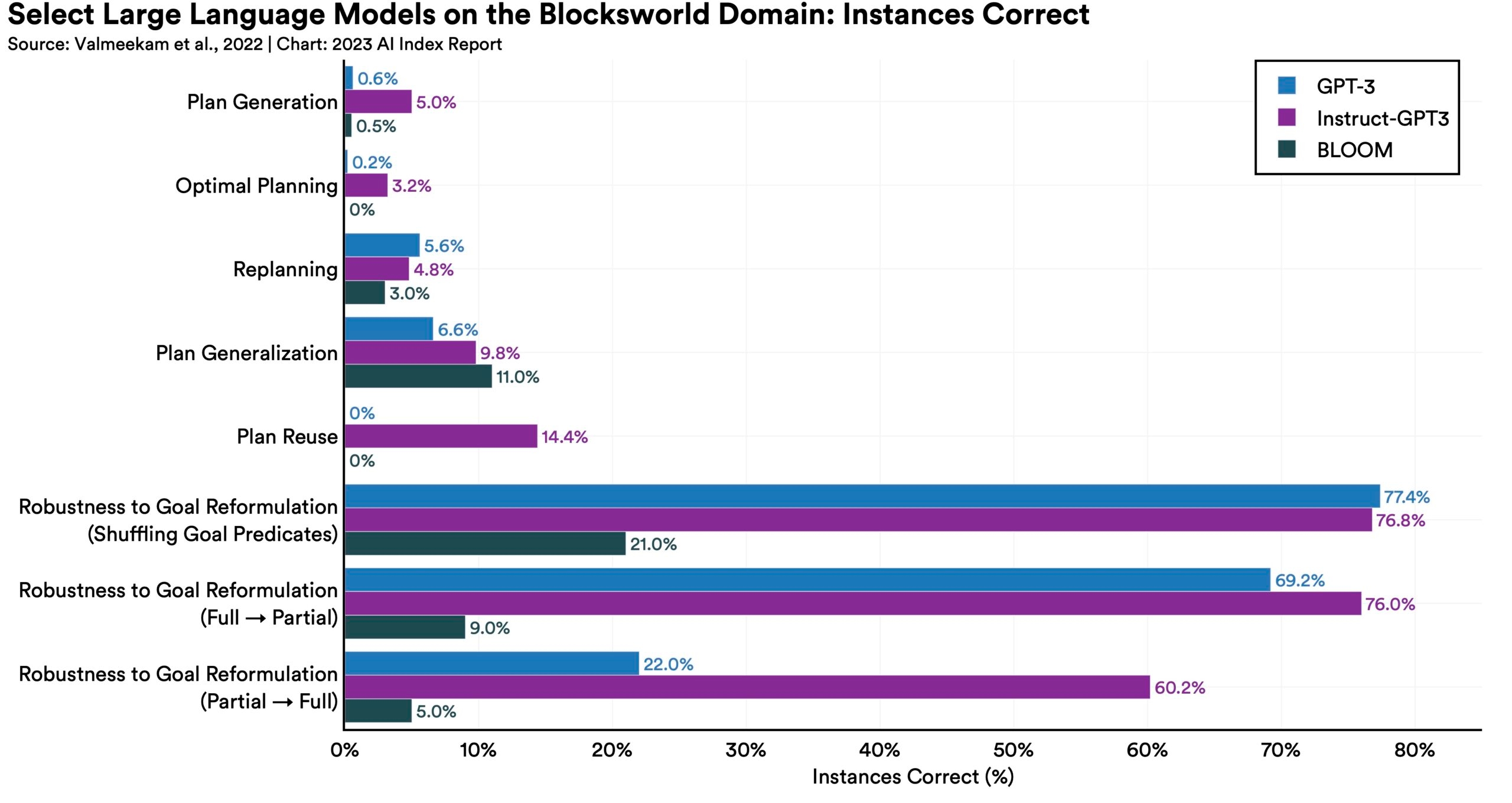

Capable language models still struggle with reasoning.

Language models continued to improve their generative capabilities, but new research suggests that they still struggle with complex planning tasks.

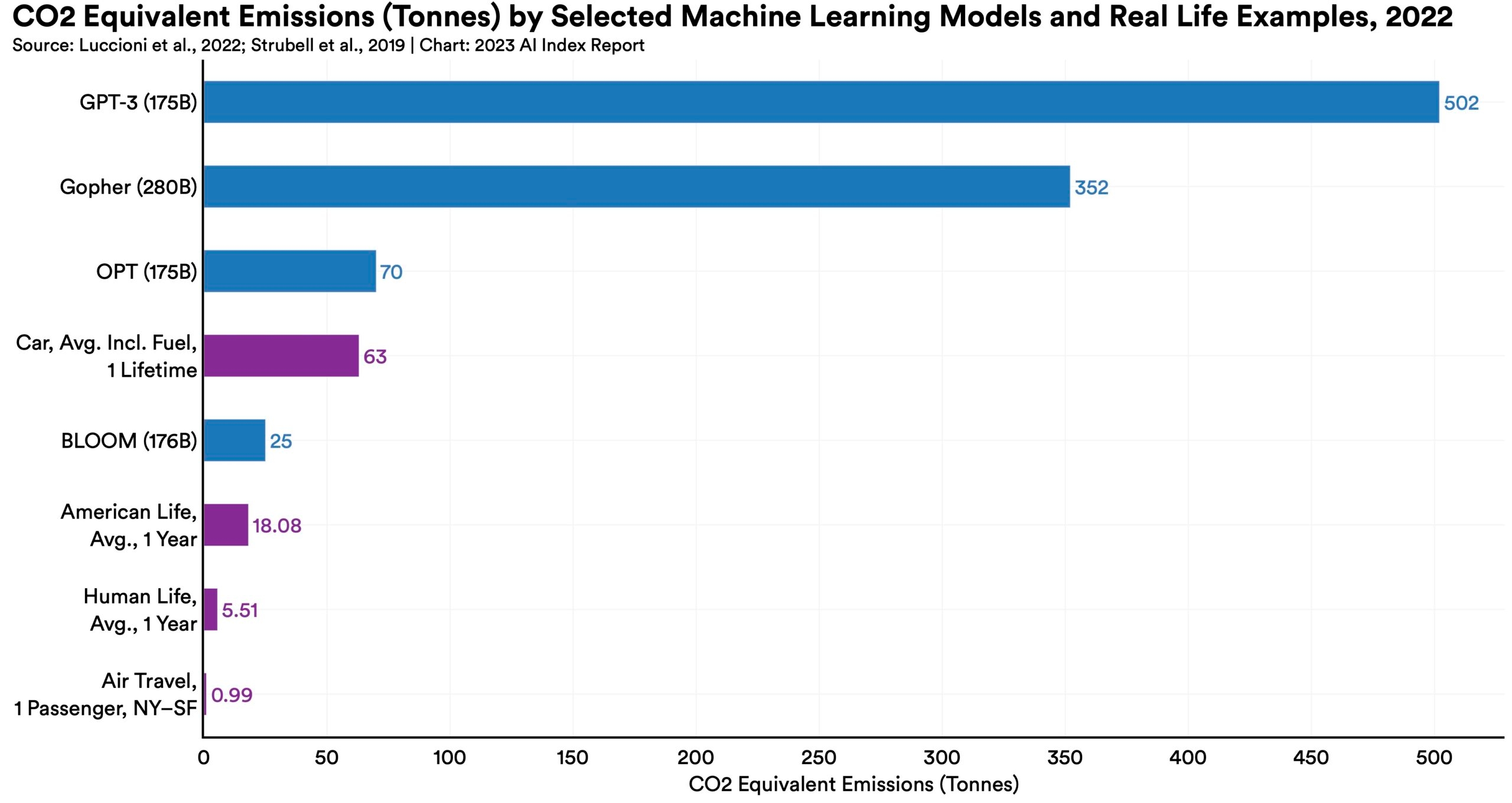

AI is both helping and harming the environment.

New research suggests that AI systems can have serious environmental impacts. According to Luccioni et al., 2022, BLOOM’s training run emitted 25 times more carbon than a single air traveler on a one-way trip from New York to San Francisco. Still, new reinforcement learning models like BCOOLER show that AI systems can be used to optimize energy usage.

The world's best new scientist...AI?

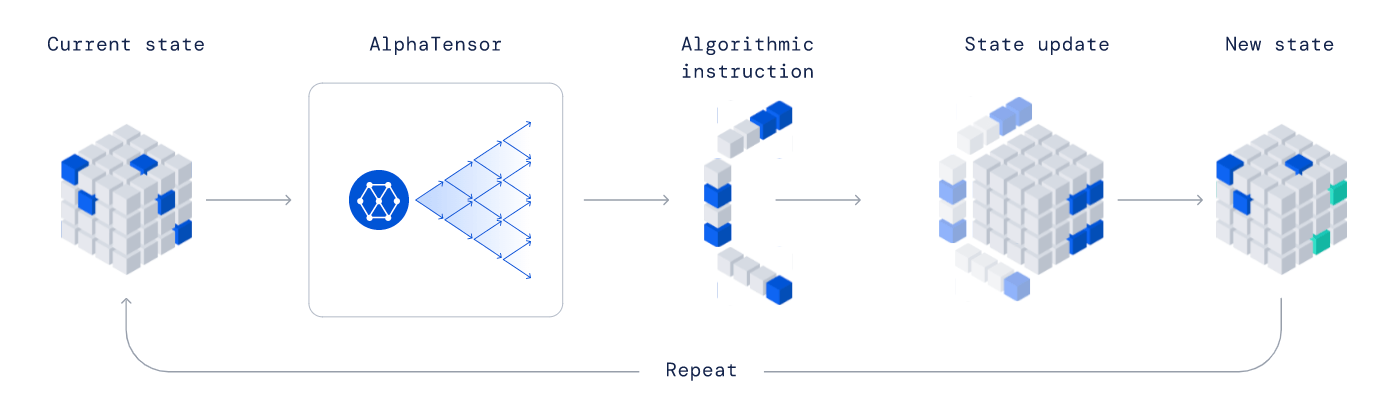

AI models are starting to rapidly accelerate scientific progress. In 2022, they were used to aid hydrogen fusion, improve the efficiency of matrix manipulation, and generate new antibodies.

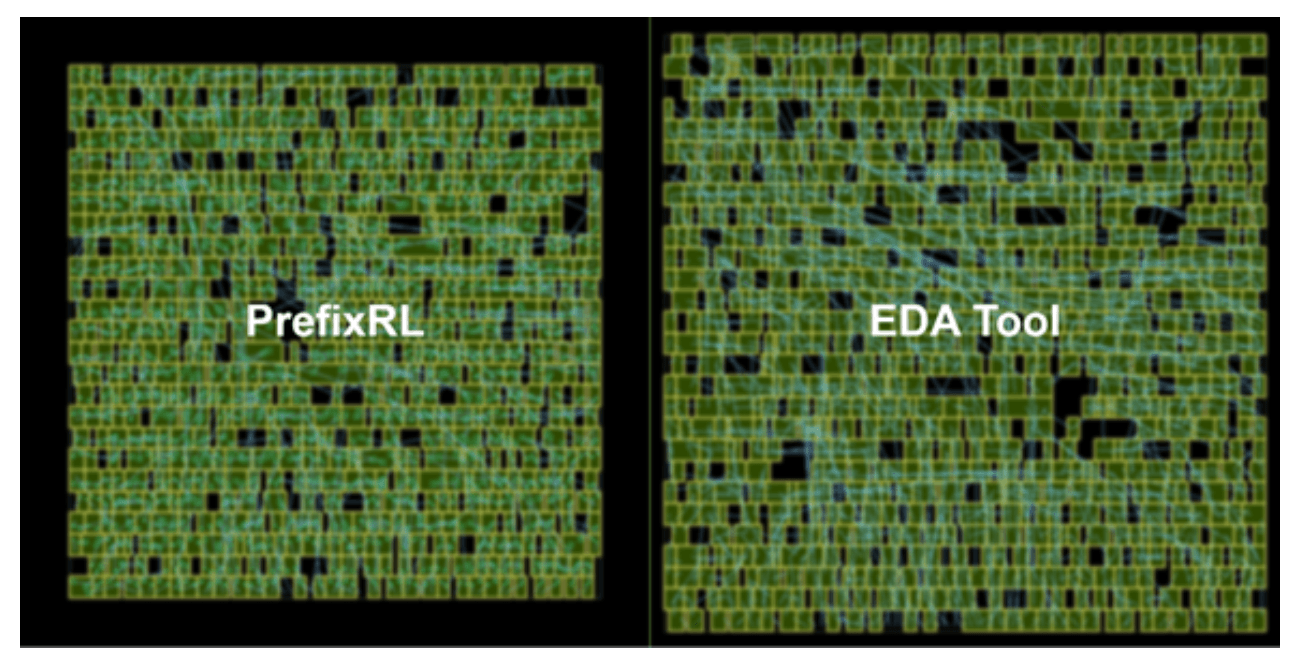

AI starts to build better AI.

Nvidia used an AI reinforcement learning agent to improve the design of the chips that power AI systems. Similarly, Google recently used one of its language models, PaLM, to suggest ways to improve the very same model. Self-improving AI of this kind will accelerate AI progress.