AI Overreliance Is a Problem. Are Explanations a Solution?

Stanford researchers show that shifting the cognitive costs and benefits of engaging with AI explanations could result in fewer erroneous decisions due to AI overreliance.

In theory, a human collaborating with an AI system should make better decisions than either working alone. But humans often accept an AI system’s recommended decision even when it is wrong – a conundrum called AI overreliance. This means people could be making erroneous decisions in important real-world contexts such as medical diagnosis or setting bail, says Helena Vasconcelos, a Stanford undergraduate student majoring in symbolic systems.

One theory: AI should explain its decisions. But in paper after paper, scholars find that explainable AI doesn’t reduce overreliance.

“It’s a result that doesn’t align with our intuition,” Vasconcelos says.

In a recent study, she, Ranjay Krishna of the University of Washington, and Stanford HAI affiliates Michael Bernstein, an associate professor of computer science, and Tobias Gerstenberg, an assistant professor of psychology, asked: Would AI explanations matter if it took less mental effort to scrutinize them? What if there was a greater benefit to doing so?

In experiments with online workers, Vasconcelos and her colleagues found that people are less likely to over-rely on an AI’s prediction when the accompanying AI explanations are simpler than the task itself and when the financial reward for a correct answer is greater.

Read the full study: Explanations Can Reduce Overreliance on AI Systems During Decision-Making

“We’re trying to target not only providing an explanation but also encouraging people to engage with the explanation,” Vasconcelos says. “And we get that by either making the explanation easier to understand or by raising the stakes.”

And because AI developers don’t have many explainability techniques that require substantially less work to verify than doing the task manually, she adds, “Maybe we need to build some.”

Toward Engagement with AI Explanations

As Vasconcelos and her colleagues examined the research finding that AI explanations don’t reduce AI overreliance, they noticed something: When the experiments involved easy tasks, such as assessing the sentiment behind a movie review (is it a rave or a pan?), explanations likely didn’t affect overreliance because people could just as easily do the task themselves. And when the experiments used hard tasks, the AI explanations were often just as complex to understand as the task itself.

For example, one prior experiment asked people to collaborate with an AI on a reading comprehension task for which the AI summarized a passage and then provided an explanation for the summary. The human collaborators could then check the AI’s work in one of two ways: by doing the reading comprehension task themselves, or by examining the AI summary and explanation. Vasconcelos noted that both options were equally challenging; it was no surprise, then, when humans accepted the AI’s decision rather than dig into the explanation.

Just as in human-to-human interactions, we’re more likely to cognitively engage with an explanation that’s simpler than the task itself, Vasconcelos says. “If my professor is doing something complicated and then explains it in a complicated way, it’s not that helpful. But if there is an easy-to-understand explanation, I’m more likely to scrutinize it to make sure it makes sense.”

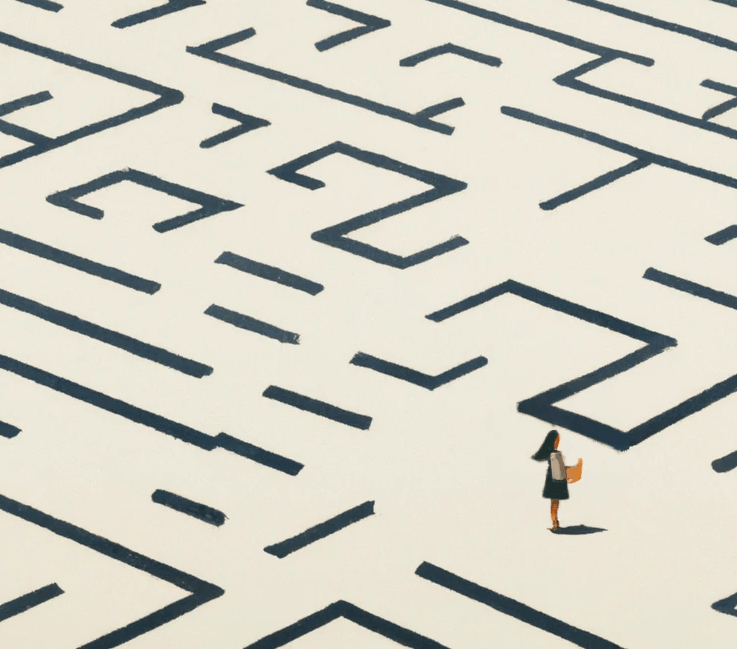

Mazes, Costs, Benefits & Utilities

To test their theory, Vasconcelos and her colleagues asked online crowdworkers (on a platform called Prolific) to collaborate with an AI to find the solution to a maze puzzle across three different levels of maze difficulty (easy, medium, and hard). The AI provided its solution (the correct exit point for solving the maze) and either no explanation or one of several different levels of explanation, including turn-by-turn directions (complex); an image of the maze with the AI’s solution highlighted in red (less complex); an image of the AI’s solution highlighted in red but the highlight stops if it hits a barrier (simpler); and an image with the path highlighted in red before it hits a barrier and in blue after (simplest).

The result: The turn-by-turn instructions did not reduce overreliance at any level of maze difficulty. Scrutinizing them was as complex as solving the maze on one’s own. And when the maze was easy to do without assistance, explanations – even highlights – had no effect on overreliance.

But overreliance did decline when workers were shown highlighted maze routes revealing that the AI’s solution crossed over a barrier. And the more intuitive the highlights (e.g., changing colors), the more overreliance decreased. This was especially true for the most difficult mazes.

The team concluded that if a task is hard and the explanation is simple, it does reduce overreliance. But explanations have no impact on overreliance if the task is hard and the explanation is complex, or if the task is easy and the explanation is also easy.

As an additional experiment, the team asked crowdworkers to choose between completing mazes of differing difficulty levels on their own for a specified amount of money or accepting less pay to have the help of an AI-recommended solution, either without an explanation, with the complex turn-by-turn directions, or with the simpler, highlighted red path. The results showed that workers value the AI’s help more when the task is harder, and value a simpler explanation more than a complex one.

The team also ran an experiment offering a monetary bonus for correct solutions to the maze. They found that as the benefit of engaging with the AI increases, overreliance decreases. “If there’s a benefit to be gained, people are going to put more effort toward avoiding mistakes,” Vasconcelos says.

While offering financial bonuses for making correct decisions might not work in the real world, it’s a useful proxy for other types of benefits such as intrinsic satisfaction, gamification, or other ways of making a task more engaging or interesting for people, Vasconcelos says. “We can motivate people with other mechanisms, using the same underlying principle of increasing benefit.”

For researchers who have been scratching their heads at the finding that explanations don’t reduce overreliance, these results should offer relief, Vasconcelos says. This work should also reinvigorate XAI researchers by giving them renewed motivation to improve and simplify AI explanations. “We’re proposing that when the task being done is hard, they should work on designing explanations that make errors of AI easy to spot,” she says.

Hear about this research in Vasconcelos’ own words, in this video with collaborators Krishna, Gerstenberg, Bernstein, second-year computer science PhD student Matthew Jörke, and Madeleine Grunde-McLaughlin at the University of Washington.

Stanford HAI’s mission is to advance AI research, education, policy and practice to improve the human condition. Learn more