How Much Research Is Being Written by Large Language Models?

New studies show a marked spike in LLM usage in academia, especially in computer science. What does this mean for researchers and reviewers?

In March of this year, a tweet about an academic paper went viral for all the wrong reasons. The introduction section of the paper, published in Elsevier’s Surfaces and Interfaces, began with this line: “Certainly, here is a possible introduction for your topic.”

Look familiar?

It should, if you have used ChatGPT to generate content. LLMs are increasingly used to assist with writing tasks, but examples like this in academia have been largely anecdotal and had not been quantified until now.

“While this is an egregious example,” says James Zou, associate professor of biomedical data science and, by courtesy, of computer science and of electrical engineering at Stanford, “in many cases, it’s less obvious, and that’s why we need to develop more granular and robust statistical methods to estimate the frequency and magnitude of LLM usage. At this particular moment, people want to know what content around us is written by AI. This is especially important in the context of research, for the papers we author and read and the reviews we get on our papers. That’s why we wanted to study how much of those have been written with the help of AI.”

In two papers looking at LLM use in scientific publishing, Zou and his team* found that 17.5% of computer science papers and 16.9% of peer review text had at least some content drafted by AI. The paper on LLM usage in peer reviews will be presented at the International Conference on Machine Learning.

Read “Mapping the Increasing Use of LLMs in Scientific Papers” and “Monitoring AI-Modified Content at Scale: A Case Study on the Impact of ChatGPT on AI Conference Peer Reviews.”

Here Zou discusses the findings and implications of this work, which was supported through a Stanford HAI Hoffman Yee Research Grant.

How did you determine whether AI wrote sections of a paper or a review?

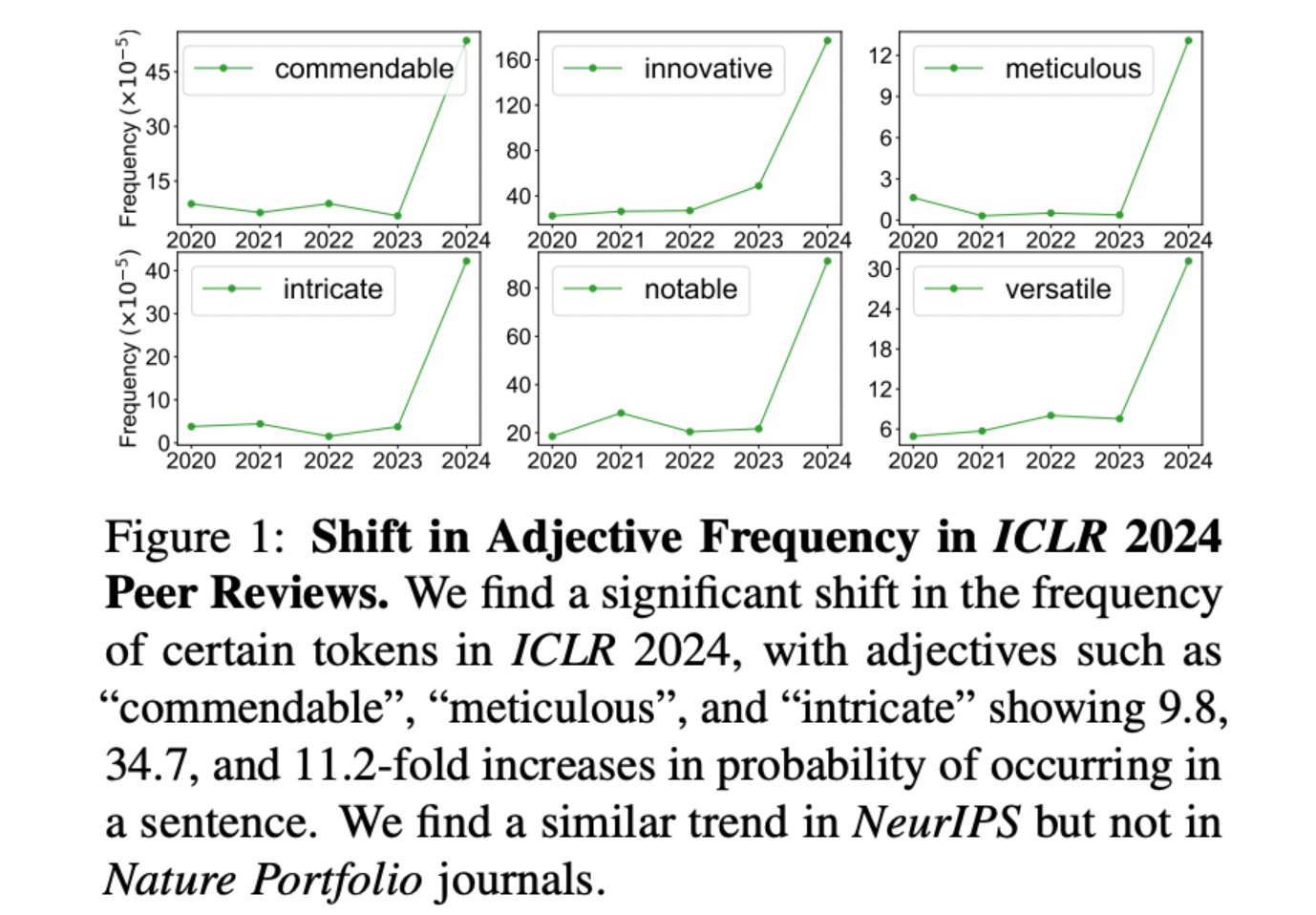

We first saw that there are these specific words – like commendable, innovative, meticulous, pivotal, intricate, realm, and showcasing – whose frequency in reviews sharply spiked, coinciding with the release of ChatGPT. Additionally, we know that these words are much more likely to be used by LLMs than by humans. The reason we know this is that we actually did an experiment where we took many papers, used LLMs to write reviews of them, and compared those reviews to reviews written by human reviewers on the same papers. Then we quantified which words are more likely to be used by LLMs vs. humans, and those are exactly the words listed. The fact that they are more likely to be used by an LLM and that they have also seen a sharp spike coinciding with the release of LLMs is strong evidence.
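To make the word-comparison step concrete, here is a minimal Python sketch of one way to rank words by how much more often they appear in LLM-written reviews than in human-written ones. It is an illustration only, not the team’s actual code or statistical method: the function names (`word_counts`, `log_odds`), the add-one smoothing, and the four toy reviews are all assumptions made up for this example.

```python
import math
import re
from collections import Counter


def word_counts(texts):
    """Count lowercase word tokens across a list of documents."""
    counts = Counter()
    for text in texts:
        counts.update(re.findall(r"[a-z']+", text.lower()))
    return counts


def log_odds(llm_texts, human_texts, smoothing=1.0):
    """Map each word to log(relative frequency in LLM text / in human text).

    Additive smoothing (an assumption of this sketch) keeps words that are
    missing from one corpus from producing a division by zero.
    """
    llm = word_counts(llm_texts)
    human = word_counts(human_texts)
    vocab = set(llm) | set(human)
    llm_total = sum(llm.values()) + smoothing * len(vocab)
    human_total = sum(human.values()) + smoothing * len(vocab)
    return {
        word: math.log((llm[word] + smoothing) / llm_total)
        - math.log((human[word] + smoothing) / human_total)
        for word in vocab
    }


# Invented toy data standing in for reviews of the same papers.
llm_reviews = [
    "The paper presents a commendable and innovative approach.",
    "The meticulous experiments are commendable.",
]
human_reviews = [
    "The paper is fine but the experiments are weak.",
    "The approach is not new and the paper is unclear.",
]

scores = log_odds(llm_reviews, human_reviews)
# A positive score means the word is relatively more common in the LLM reviews.
print(max(scores, key=scores.get), round(scores["commendable"], 2))
```

On real data, the same ranking would be computed over thousands of paired reviews, and the top-scoring words could then be tracked over time to see whether their frequency jumps after ChatGPT’s release.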

Some journals permit the use of LLMs in academic writing, as long as it’s noted, while others, including Science and the ICML conference, prohibit it. How are the ethics perceived in academia?

This is an important and timely topic because the policies of various journals are changing very quickly. For example, Science said in the beginning that they would not allow authors to use language models in their submissions, but they later changed their policy and said that people could use language models, but authors have to explicitly note where the language model is being used. All the journals are struggling with how to define this and what’s the right way going forward.

You observed an increase in usage of LLMs in academic writing, particularly in computer science papers (up to 17.5%). Math and Nature family papers, meanwhile, used AI text about 6.3% of the time. What do you think accounts for the discrepancy between these disciplines?

Artificial intelligence and computer science disciplines have seen an explosion in the number of papers submitted to conferences like ICLR and NeurIPS. And I think that’s really caused a strong burden, in many ways, to reviewers and to authors. So now it’s increasingly difficult to find qualified reviewers who have time to review all these papers. And some authors may feel more competition that they need to keep up and keep writing more and faster.

You analyzed close to a million papers on arXiv, bioRxiv, and Nature from January 2020 to February 2024. Do any of these journals include humanities papers or anything in the social sciences?

We mostly wanted to focus more on CS and engineering and biomedical areas and interdisciplinary areas, like Nature family journals, which also publish some social science papers. Availability mattered in this case. So, it’s relatively easy for us to get data from arXiv, bioRxiv, and Nature. A lot of AI conferences also make reviews publicly available. That’s not the case for humanities journals.

Did any results surprise you?

A few months after ChatGPT’s launch, we started to see a rapid, linear increase in the usage pattern in academic writing. This tells us how quickly these LLM technologies diffuse into the community and become adopted by researchers. The most surprising finding is the magnitude and speed of the increase in language model usage. Nearly a fifth of papers and peer review text use LLM modification. We also found that peer reviews submitted closer to the deadline and those less likely to engage with author rebuttal were more likely to use LLMs.

This suggests a couple of things. Perhaps some of these reviewers are not as engaged with reviewing these papers, and that’s why they are offloading some of the work to AI to help. This could be problematic if reviewers are not fully involved. As one of the pillars of the scientific process, it is still necessary to have human experts providing objective and rigorous evaluations. If this is being diluted, that’s not great for the scientific community.

What do your findings mean for the broader research community?

LLMs are transforming how we do research. It’s clear from our work that many papers we read are written with the help of LLMs. There needs to be more transparency, and people should state explicitly how LLMs are used and if they are used substantially. I don’t think it’s always a bad thing for people to use LLMs. In many areas, this can be very useful. For someone who is not a native English speaker, having the model polish their writing can be helpful. There are constructive ways for people to use LLMs in the research process; for example, in earlier stages of their draft. You could get useful feedback from an LLM in real time instead of waiting weeks or months to get external feedback.

But I think it’s still very important for the human researchers to be accountable for everything that is submitted and presented. They should be able to say, “Yes, I will stand behind the statements that are written in this paper.”

*Collaborators include: Weixin Liang, Yaohui Zhang, Zhengxuan Wu, Haley Lepp, Wenlong Ji, Xuandong Zhao, Hancheng Cao, Sheng Liu, Siyu He, Zhi Huang, Diyi Yang, Christopher Potts, Christopher D. Manning, Zachary Izzo, Lingjiao Chen, Haotian Ye, and Daniel A. McFarland.

Stanford HAI’s mission is to advance AI research, education, policy and practice to improve the human condition.