To Cooperate Better, Robots Need To Think About Hidden Agendas

New approaches favoring unspoken strategies between collaborating machines could drive the next wave of advances in robotics.

Most artificial intelligence experts and technophiles understand that the smart future will someday include countless robots and autonomous vehicles that interact seamlessly among themselves and with people. But to avoid total mayhem, autonomous cars will have to influence and avoid other vehicles on the road, including those driven by humans. To do this, the robots will have to anticipate the behavior of these other mobile and free-thinking objects and to “co-adapt” for each other’s mutual benefit.

The incredible complexity of such a challenge is just one of the roadblocks that must be overcome, but new research from Stanford University indicates that the key may be to simplify rather than overthink.

Computer scientists Dorsa Sadigh and Chelsea Finn, faculty members at the Stanford Center for Human-Centered Artificial Intelligence (HAI), along with their team, Stanford computer science graduate student Annie Xie, Virginia Tech assistant professor Dylan Losey, and Stanford undergraduate Ryan Tolsma, have developed a novel way for robots to divine the next moves of other autonomous entities by thinking less about all the possible actions the other might take and more about what the other party is trying to accomplish—a concept dubbed “latent intent.”

One perspective, commonly referred to as Theory of Mind, has tended to approach the problem as if programming a robot to play chess. The overarching goal has been to try to calculate and plot out every potential move, and the subsequent consequences of each move the other might make, in order to settle upon what seems most likely or most rewarding. But this is far too probabilistically complex and computationally intensive to be done effectively.

“Instead,” Sadigh says, “what we discovered is that they actually need to focus on a much smaller and simpler set of strategies, not every imaginable option.”

By simplifying the process, Sadigh and Finn have found success where others only reached dead ends. They call the approach “LILI”—Learning and Influencing Latent Intent, which recently won the “Best Paper” award at the 2020 Conference on Robot Learning.

To seamlessly co-adapt with other machines, a robot must first anticipate the unspoken ways in which its collaborators’ behavior will change and then modify its own behaviors to subtly influence those hidden intentions. In the simplest terms, using LILI, the robot learns which move will come next and then anticipates how its own behavior will affect the other’s strategy—actively influencing the other agent in a process of give-and-take co-adaptation.

Every day, people achieve such tasks without a second thought. When driving or walking, they don’t secretly model every nuance of the thinking of others. They confine their thinking to understanding their collaborators’ high-level priorities—what is that person trying to accomplish? Sadigh and Finn call this the latent strategy.

By learning the other’s high-level strategy, the robot anticipates how the other will respond. What’s more, LILI then uses that high-level understanding to modify its own behavior to influence the future behavior of its correspondents. LILI works whether the two agents are cooperating with or competing against one another.

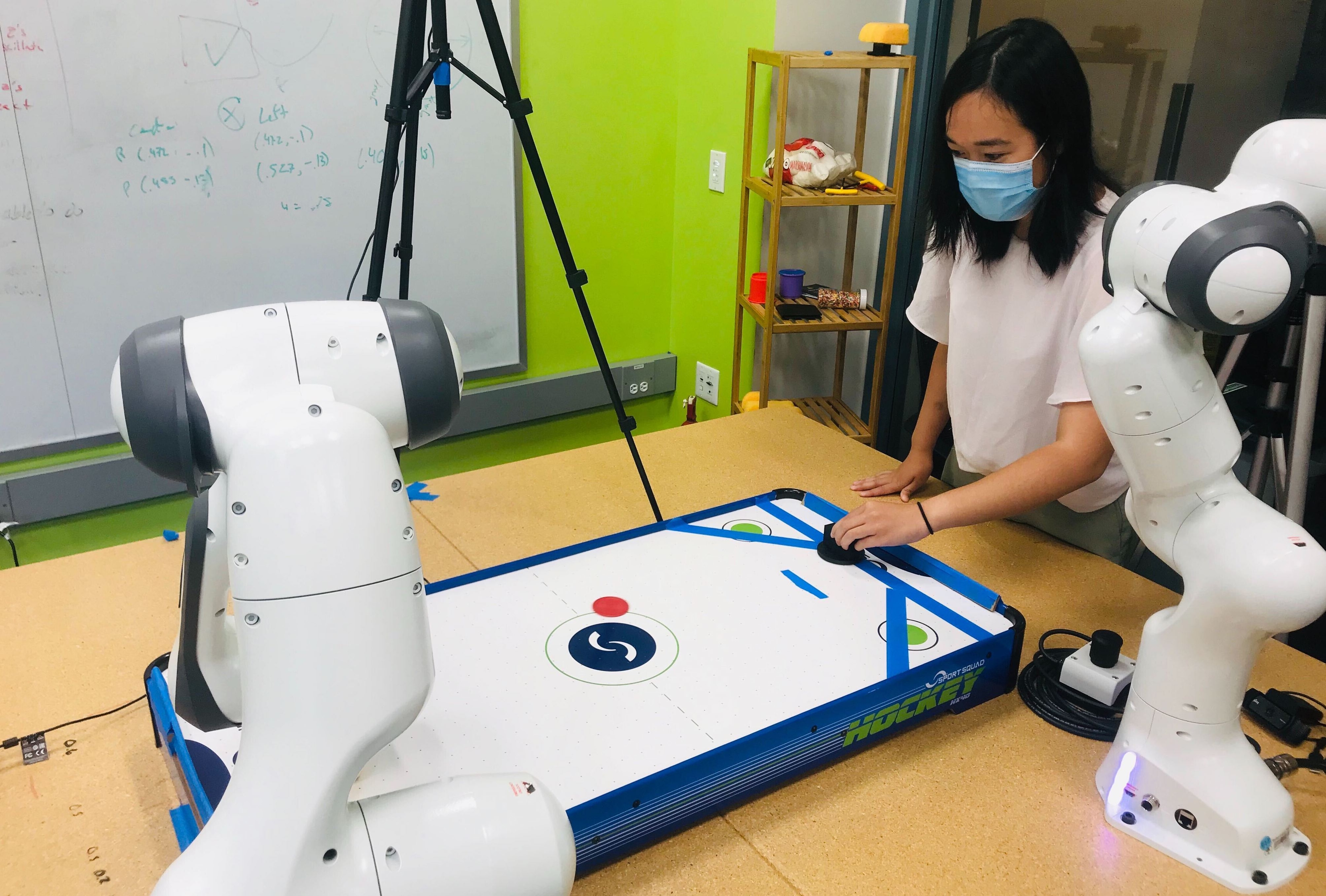

To demonstrate, Sadigh and team taught two robots to play air hockey. Over repeated interactions, the learning robot (the goalie) comes to understand its opponent’s policy is not only to score but to actively aim away from the spot where it was last blocked. The learning robot then uses that understanding to influence its opponent into aiming exactly where the goalie blocks best. Soon, the shooting robot is continually aiming exactly where the goalie robot is most likely to block the shot. Eventually, the goalie robot is able to block more than 90 percent of the shooter’s attempts.

When a human steps in as the shooter, the results are similar but not quite as impressive, which Sadigh chalks up to complexities and imperfections in the human’s movements. “It works very well with other robots and, overall, LILI performed better than state-of-the-art reinforcement learning algorithms now in use,” Sadigh says. “We’re working on that.”

Real-world applications of this work run the gamut from the aforementioned autonomous vehicles, which must continually anticipate and cooperate with other vehicles to avoid calamity, to assistive robots that help physically challenged individuals do everything from feeding themselves to getting dressed in the morning.

“I’m particularly excited about the possibility of robots operating in real, human environments. To get there, it will be important for robots to learn amidst people with intentions that change over time, and I believe this work is an important step in addressing that challenge,” Finn says. “That day is still out there in the future, but I think we are moving in the right direction.”

Stanford HAI's mission is to advance AI research, education, policy and practice to improve the human condition. Learn more.