Law, Policy, & AI Update: GitHub Lawsuit, China & Chips

The U.S. continues to ramp up export controls on chips (and maybe soon AI), the first lawsuit is filed for software piracy, and more.

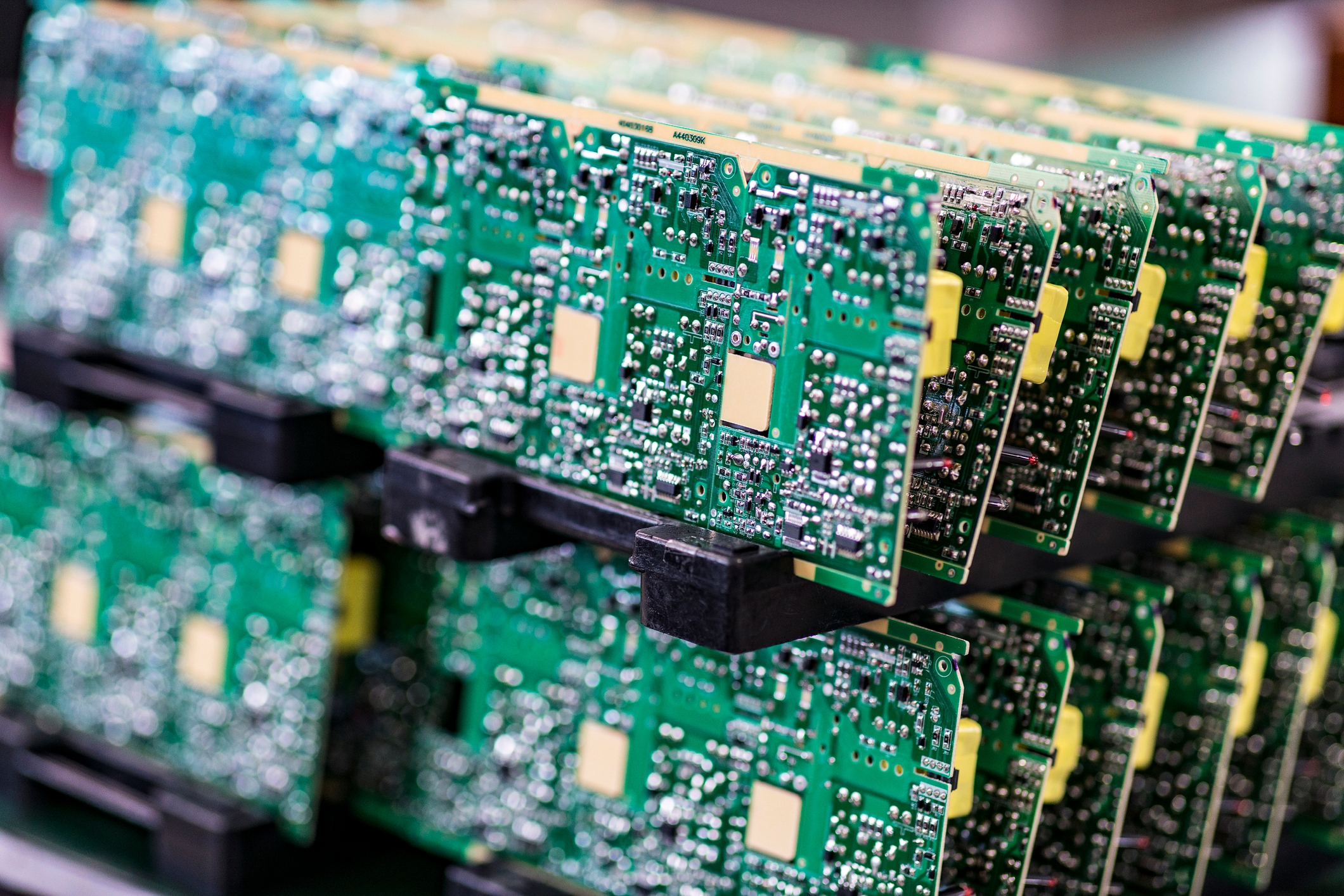

Welcome to the latest law, policy, and AI update. Two recent developments stick out. First, the United States continues to ramp up export controls to prevent China from expanding its chip manufacturing capabilities. The Department of Commerce Bureau of Industry and Security (“BIS”) noted that one of the goals of these export controls was to curtail the development of AI technologies that enable violations of human rights. If in the near future AI software is also targeted for export controls, as some commentators suggest may be the case, it is not clear how this will affect AI research. It is certainly worth keeping an eye on this space.

Second, intellectual property concerns about large pretrained models are coming to a boil, with the first lawsuit against GitHub’s Copilot system officially filed in the Northern District of California. Nonetheless, companies seem confident in the legality of these systems and continue to integrate them into products. As lawsuits progress, it will be important to see how intellectual property law evolves to deal with foundation models.

Law

A group of attorneys has filed a class-action lawsuit against GitHub over its Copilot system. The system generates code suggestions for users, but it appears to occasionally reproduce code verbatim from existing codebases, even those under restrictive licenses. The lawsuit side-steps a fair use defense by focusing on a number of other claims, including violations of DMCA § 1202 (for not properly including copyright information in generated outputs), CCPA (for privacy violations), contracts (for violating terms of use and license terms), and unlawful anti-competitive conduct. Some earlier discussion by TechDirt suggests that it may be difficult to win such a case, but there is a lot of uncertainty. Similar AI models for generating art are disrupting the creative work of human artists. But this hasn’t stopped companies from integrating AI-generated art into their services. Both Shutterstock and Microsoft are leveraging OpenAI’s DALL-E model in their products. No litigation has yet been filed against these other generative models.

Organizations and individuals have submitted comments to the 2022 Review of Notorious Markets for Counterfeiting and Piracy by the Office of the United States Trade Representative. Related to the ongoing copyright disputes around AI systems is the comment from the Recording Industry Association of America, which seeks to flag AI music mixing software as digital piracy. The organization argues that training AI models on members’ music “is unauthorized and infringes our members’ rights by making unauthorized copies of our members’ works. In any event, the files these services disseminate are either unauthorized copies or unauthorized derivative works of our members’ music.”

A proposed U.S. bill would bring back patenting of software, including AI algorithms. The U.S. Supreme Court’s decision in Alice Corp. v. CLS Bank has made it difficult to patent software. See the Electronic Frontier Foundation’s post for some critical discussion.

The United States Department of Health and Human Services has a new proposed rule on Nondiscrimination in Health Programs and Activities. This rule “states that a covered entity must not discriminate against any individual on the basis of race, color, national origin, sex, age, or disability through the use of clinical algorithms in its decision-making.” This is after the FDA released its plan for medical AI software last month. In parallel, the UK Medicines & Healthcare products Regulatory Agency has put out the “Software and AI as a Medical Device Change Programme - Roadmap,” which seeks to provide more assurances that software within medical settings is well-tested.

Policy

The United States has continued to expand export controls against China’s technology sector. In a series of new rules, summarized here, U.S. persons must apply for special licenses to transfer any item to China that is used for chip manufacturing. The rules also target items that are the direct products of “U.S.-origin” software and technology. The Biden Administration is allegedly eyeing other control mechanisms to further expand this effort, including potentially limiting export of certain types of AI software. The Department of Commerce Bureau of Industry and Security (“BIS”) noted that one of the goals of these export controls was to curtail the development of AI technologies that enable violations of human rights.

The White House put out the Blueprint for an AI Bill of Rights: A Vision for Protecting Our Civil Rights in the Algorithmic Age. The blueprint, which proposes a set of principles to improve the safety of AI systems and prevent discrimination, has been both praised and critiqued. In particular, it is not binding on its own and thus can only provide guidance without enforcement. However, discussion from Lawfare notes that it lays the groundwork for federal agencies to use their authority to operationalize the principles.

Canada’s House of Commons has put out a report on facial recognition that looks at the benefits and risks of using facial recognition in law enforcement contexts and, among other recommendations, suggests imposing a “moratorium on the use of [facial recognition technology] in Canada.”

The United States National Artificial Intelligence Advisory Committee held its first public field hearing and second meeting at Stanford this month to discuss global AI competitiveness and collaboration, AI R&D, and the AI workforce. Stanford Professor Daniel E. Ho gave comments on the importance of investing in public-sector applications of AI, noting that the majority of AI PhDs go into industry rather than academia or the public sector.

Oakland has reversed course and will not be using armed robots after significant pushback. See also the Electronic Frontier Foundation’s discussion here. (On the other hand, China Kestrel Defense posted a video of their armed robot dog being delivered via a quadcopter.)

Legal Academia AI Roundup

Bryant Walker Smith, DALL-E Does Palsgraf. Uses DALL-E to illustrate an important torts case that is usually taught to first-year law students.

Amici Brief of Science, Legal, and Technology Scholars in Renderos et al. v. Clearview AI, Inc. et al. Academics look to file a brief in a case against Clearview AI’s facial recognition system, bringing up a number of important arguments, including the California right of publicity that protects a person’s identity from being used without their permission (with some nuance).

Mehtab Khan and Alex Hanna, The Subjects and Stages of AI Dataset Development: A Framework for Dataset Accountability. Breaks down different parts of the dataset curation process and examines legal issues and potential harms for each, including potential copyright infringement and privacy law violations.

Johann Laux, Sandra Wachter, and Brent Mittelstadt, Trustworthy Artificial Intelligence and the European Union AI Act: On the Conflation of Trustworthiness and the Acceptability of Risk. Argues that the EU AI Act conflates ‘trustworthiness’ with ‘acceptability of risks,’ which the authors consider problematic.

Aude Cefaliello and Miriam Kullman, Offering False Security: How the Draft Artificial Intelligence Act Undermines Fundamental Workers Rights.

I. Elizabeth Kumar, Keegan E. Hines, and John P. Dickerson, Equalizing Credit Opportunity in Algorithms: Aligning Algorithmic Fairness Research with U.S. Fair Lending Regulation. A paper that tries to operationalize and align algorithmic fairness research with the realities of U.S. law. This kind of work is important because there is often a disconnect between what antidiscrimination law allows and what algorithmic fairness researchers propose.

Ellen P. Goodman and Julia Trehu, AI Audit Washing and Accountability. A paper arguing that the lack of specificity for proposed AI audit requirements could result in audit washing, where audits do not actually provide the assurances that regulators would hope for. This is an important piece for thinking about how regulations requiring audits should be crafted.

Who am I? I’m a PhD (Machine Learning)-JD candidate at Stanford University and Stanford RegLab fellow (you can learn more about my research here). Each month I round up interesting news and events somewhere at the intersection of Law, Policy, and AI. Feel free to send me things that you think should be highlighted @PeterHndrsn. Also… just in case, none of this is legal advice, and any views I express here are purely my own and are not those of any entity, organization, government, or other person.
