Most-Read: The Stanford HAI Stories that Defined AI in 2025

Readers wanted to know if their therapy chatbot could be trusted, whether their boss was automating the wrong job, and if their private conversations were training tomorrow's models.

2025 was the year artificial intelligence stopped being a fascinating abstraction and began meeting messy human reality. Readers wanted to know: Can I trust my therapy chatbot? Is my boss automating the wrong parts of my job? Are my private conversations training tomorrow's models?

At Stanford HAI, scholars were tackling these harder questions, exploring not just what AI can do but what it should do, and for whom. The stories below, ranked by readership, capture a year when society began reckoning with AI not as science fiction, but as the imperfect, consequential technology reshaping mental health, childhood safety, workplace dignity, privacy, and geopolitical power.

Exploring the Dangers of AI in Mental Health Care

While AI therapy chatbots promise accessible mental health support to millions who can't reach human therapists, a new Stanford study reveals they can stigmatize the very people they're meant to help, and might even assist with their worst impulses. Researchers tested five popular therapy bots and found they stigmatized conditions like schizophrenia and alcohol dependence, with newer, bigger models performing no better than older ones. More alarmingly, when presented with suicidal intent disguised as a question about tall NYC bridges, one bot helpfully suggested the Brooklyn Bridge's 85-meter towers, exactly the kind of enabling response a real therapist would challenge. The takeaway? AI might someday excel at therapist paperwork or training simulations, but when it comes to the challenging, relational work of actually healing humans, we still need other humans in the room.

AI Index 2025: State of AI in 10 Charts

Stanford HAI’s 2025 AI Index revealed a technology simultaneously shrinking, cheapening, and spiraling: smaller models now match 2022's behemoths with a 142-fold reduction in parameters, while the inference cost of GPT-3.5-level performance plummeted more than 280-fold between November 2022 and October 2024. China's models have caught up to U.S. quality (even as America still leads in quantity and investment), and businesses have embraced AI en masse (78% of organizations reported using it, up from 55% a year earlier). But maturity brings growing pains: AI-related incidents jumped 56% to a record 233 cases in 2024. The global mood is split: Asia is optimistic (83% of Chinese respondents see more benefits than drawbacks), while North Americans shrug (only 39% of Americans agree). Welcome to AI's moody adolescence: cheaper, faster, everywhere, and still problematic.

How Do We Protect Children in the Age of AI?

While teachers worry about ChatGPT writing essays, a darker AI trend is brewing: "undress" apps that let any tech-unsavvy teen turn a clothed photo of a classmate into convincing pornography with zero Photoshop skills required. Stanford HAI Fellow Riana Pfefferkorn warns that most schools remain blindsided by these purpose-built "nudify" tools—easily discovered through app stores and social media ads. Though platforms are legally required to remove such content and new federal laws empower takedown requests, the criminal justice system fumbles when perpetrators are children themselves, making prevention the only viable strategy. Pfefferkorn's prescription: Parents must teach consent that extends to synthetic images, encourage kids to report incidents anonymously, think twice before posting their children's faces online, and pressure schools to develop policies before—not after—the first victim comes forward. The apps can't be erased from the internet, but normalizing their use as harmless pranks? That we can stop.

What Workers Really Want from Artificial Intelligence

Stanford researchers asked 1,500 workers what they want from AI and 52 AI experts what the technology can actually do—then discovered an awkward mismatch. The verdict: 41% of current AI implementation falls into "unwanted or impossible" territory, with companies automating tasks like creative writing that workers want to keep and ignoring desired automations like budget monitoring that AI can't yet handle. Workers crave help with repetitive drudgery (scheduling, filing, error-fixing) while fiercely guarding creative work and client communication. Meanwhile, trust remains shaky, as 45% doubt AI's reliability and 23% fear job loss. The coming economic plot twist? Information-processing skills that once commanded high salaries will decline in value as AI masters data analysis, while interpersonal abilities—training, communication, emotional intelligence—will become the new premium competencies. The researchers' prescription: Stop automating what's technically possible and start building what workers actually need, because the humans you're trying to augment are the ones who'll make or break adoption.

Be Careful What You Tell Your AI Chatbot

When Anthropic quietly changed its terms this year to feed Claude conversations back into training by default, it merely joined the club: A Stanford HAI study found all six leading U.S. AI companies—Amazon, Anthropic, Google, Meta, Microsoft, and OpenAI—are harvesting user chats to train their models, with murky opt-outs and retention periods stretching to infinity. Stanford HAI Privacy and Data Policy Fellow Jennifer King warns the risks cascade insidiously: Share that you want heart-healthy recipes, the algorithm tags you as health-vulnerable, and suddenly insurance and medication ads flood your feeds—all because multi-product giants merge your AI chats with search queries, purchases, and social media activity. Children's data poses another consent minefield (Google now trains on opted-in teens; Anthropic claims to exclude under-18s but doesn't verify ages), while some companies let humans review your transcripts and most offer only vague promises about de-identification. King's blunt advice? Think twice before oversharing, opt out wherever possible, and demand federal regulation—because right now, your most sensitive confessions to AI are teaching tomorrow's models.

AI Agents Simulate 1,052 Individuals’ Personalities with Impressive Accuracy

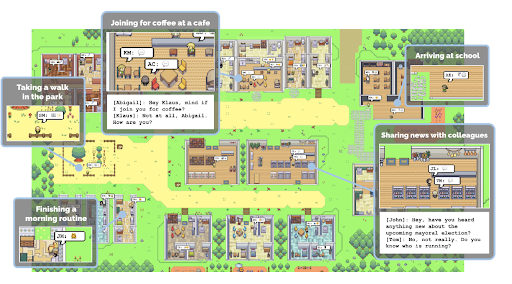

Stanford researchers have found a way to clone you: not your body, but your beliefs, quirks, and decision-making patterns. They interviewed 1,052 people for two hours each and fed the transcripts to AI agents that now answer questions and make choices with eerie accuracy, reproducing participants' survey answers 85% as accurately as the participants reproduced their own answers when retested two weeks later. Lead researcher Joon Sung Park isn't interested in deepfake nightmares or AI versions of your dead relatives (strict privacy controls block such uses); instead, he envisions a "testbed" of virtual Americans, demographically representative and individually idiosyncratic, to stress-test policies before implementation, from climate solutions to pandemic responses. The agents outperformed alternatives built on demographics alone or self-written bios, suggesting that rich interview data helps keep AI from resorting to racial stereotypes. The research team has already used the agents to replicate four out of five classic social science experiments.

Inside Trump’s Ambitious AI Action Plan

The White House's 28-page AI Action Plan this year made a U-turn away from Biden-era regulation: Out went whole-of-government coordination and harm prevention; in came infrastructure buildout, open-source models, and private-sector velocity. The plan assigned 103 tasks across agencies (none with timelines or funding) while pivoting from prescriptive rules to technical standards, model evaluations, and regulatory sandboxes. Bright spots include the strongest-ever federal endorsement of open-weight models, renewed support for the National AI Research Resource, and a "worker-first" agenda through apprenticeships and a new Workforce Research Hub. The plan is advisory, not legally binding, and its success depends on senior leadership, sustained resources, and coordination mechanisms that the document doesn't provide. The vision is market-driven AI dominance; the execution is a question mark.

How Disruptive Is DeepSeek? Stanford HAI Faculty Discuss China’s New Model

China's DeepSeek upended Silicon Valley's assumptions by building a powerful open-source model for a fraction of the cost, showing that clever engineering can rival brute-force compute and that America's "frontier AI" lead may be narrowing. In this in-depth perspective piece, nine Stanford HAI scholars see both promise and peril: The open weights and technical transparency democratize innovation (academics can finally inspect state-of-the-art systems instead of relying on closed U.S. labs) and slash costs for researchers and companies. Yet DeepSeek's opacity on privacy, copyright, and data sourcing is a concern, as are the geopolitical implications: Nearly all 200 DeepSeek engineers were educated exclusively in China (ending the "America attracts the best talent" myth), and the announcement's $600 billion NASDAQ wipeout previewed a new economic weapon in the form of deliberate announcements designed to tank markets. The era of American AI dominance via technology advances, export controls, and capital advantages is over; the era of global algorithmic competition with divergent values and unpredictable consequences has arrived.