Robotics

AI enables robots to perform increasingly complex tasks across sectors, from manufacturing to healthcare.

QuantiPhy is a new benchmark and training framework that evaluates whether AI can numerically reason about physical properties in video images. QuantiPhy reveals that today’s models struggle with basic estimates of size, speed, and distance but offers a way forward.

Robots are becoming a core building block in engineering and healthcare applications, altering the way many industries operate and improving quality of life. With AI, robots gain the ability to learn and adapt so that they can work collaboratively alongside humans and other robots in real-world environments. This industry brief provides a cross-section of key research – at HAI and across Stanford – that applies AI methods to new algorithms for human-robot interaction and robot navigation. Discover how researchers are designing intelligent robots that learn from and adapt to human demonstration, and how these robots could be used to disrupt and create markets in a wide range of industries, including manufacturing, healthcare, and autonomous vehicles.

Increasingly large robotics datasets are being collected to train larger foundation models in robotics. However, despite the fact that data selection has been of utmost importance to scaling in vision and natural language processing (NLP), little work in robotics has questioned what data such models should actually be trained on. In this work we investigate how to weigh different subsets or "domains" of robotics datasets during pre-training to maximize worst-case performance across all possible downstream domains using distributionally robust optimization (DRO). Unlike in NLP, we find that these methods are hard to apply out of the box due to varying action spaces and dynamics across robots. Our method, ReMix, employs early stopping and action normalization and discretization to counteract these issues. Through extensive experimentation on both the Bridge and OpenX datasets, we demonstrate that data curation can have an outsized impact on downstream performance. Specifically, domain weights learned by ReMix outperform uniform weights by over 40% on average and human-selected weights by over 20% on datasets used to train the RT-X models.
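
To make the idea of learned domain weights more concrete, the following is a minimal sketch of a generic DRO-style reweighting step together with a simple action discretizer. It is not taken from the ReMix paper: the exponentiated-gradient update, the function names `update_domain_weights` and `discretize_action`, the step size, the bin count, and the example loss values are all illustrative assumptions.

```python
import math


def update_domain_weights(weights, excess_losses, step_size=1.0):
    """One exponentiated-gradient step (an assumed, generic DRO-style update).

    Domains whose loss exceeds a reference by a larger margin are upweighted,
    and the result is renormalized so the weights remain a distribution.
    """
    clipped = [max(loss, 0.0) for loss in excess_losses]  # ignore negative excess
    scaled = [w * math.exp(step_size * loss) for w, loss in zip(weights, clipped)]
    total = sum(scaled)
    return [s / total for s in scaled]


def discretize_action(value, low, high, num_bins=256):
    """Normalize one action dimension with per-domain bounds, then bin it.

    Hypothetical helper: rescaling to [0, 1] and binning gives robots with
    different action ranges a shared token space.
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    unit = (value - low) / (high - low)
    unit = min(max(unit, 0.0), 1.0)  # clamp out-of-range actions
    return min(int(unit * num_bins), num_bins - 1)


# Made-up excess losses for three hypothetical domains.
weights = [1 / 3, 1 / 3, 1 / 3]
excess = [0.1, 0.5, 0.0]
new_weights = update_domain_weights(weights, excess)
mid_bin = discretize_action(0.0, -1.0, 1.0)
```

In this sketch the second domain, which has the largest excess loss, receives the largest weight after the update. Normalizing actions per domain before binning is one plausible way to keep losses comparable across robots with different action spaces, which is the difficulty the abstract describes.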

Saw, Sword, or Shovel: AI Spots Functional Similarities Between Disparate Objects

With a new computer vision model that recognizes the real-world utility of objects in images, researchers at Stanford look to push the boundaries of robotics and AI.

Real-world planning problems, including autonomous driving and sustainable energy applications like carbon storage and resource exploration, have recently been modeled as partially observable Markov decision processes (POMDPs) and solved using approximate methods. To solve high-dimensional POMDPs in practice, state-of-the-art methods use online planning with problem-specific heuristics to reduce planning horizons and make the problems tractable. Algorithms that learn approximations to replace heuristics have recently found success in large-scale fully observable domains. The key insight is the combination of online Monte Carlo tree search with offline neural network approximations of the optimal policy and value function. In this work, we bring this insight to partially observable domains and propose BetaZero, a belief-state planning algorithm for high-dimensional POMDPs. BetaZero learns offline approximations that replace heuristics to enable online decision making in long-horizon problems. We address several challenges inherent in large-scale partially observable domains, namely transitioning in stochastic environments, prioritizing action branching with a limited search budget, and representing beliefs as input to the network. To formalize the use of all limited search information, we train against a novel Q-weighted visit counts policy. We test BetaZero on various well-established POMDP benchmarks found in the literature and a real-world problem of critical mineral exploration. Experiments show that BetaZero outperforms state-of-the-art POMDP solvers on a variety of tasks.
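
The abstract does not give the formula for the Q-weighted visit counts policy, so the following is only a sketch of one plausible form: a softmax over the root Q-values multiplied by the normalized visit counts, sharpened by a temperature and renormalized. The function name `q_weighted_visit_policy`, the `temperature` parameter, and the example numbers are assumptions made for illustration.

```python
import math


def q_weighted_visit_policy(q_values, visit_counts, temperature=1.0):
    """Combine tree-search Q-values and visit counts into a policy target.

    Assumed form: policy(a) is proportional to
    (softmax(Q)[a] * N[a] / sum(N)) ** (1 / temperature).
    """
    total_visits = sum(visit_counts)
    if total_visits == 0:
        raise ValueError("at least one action must have been visited")

    # Numerically stable softmax over Q-values.
    max_q = max(q_values)
    exp_q = [math.exp(q - max_q) for q in q_values]
    exp_total = sum(exp_q)
    soft_q = [e / exp_total for e in exp_q]

    visit_frac = [n / total_visits for n in visit_counts]

    raw = [(s * v) ** (1.0 / temperature) for s, v in zip(soft_q, visit_frac)]
    raw_total = sum(raw)
    return [r / raw_total for r in raw]


# Equal Q-values: the policy reduces to the visit-count distribution.
by_visits = q_weighted_visit_policy([1.0, 1.0], [30, 10])
# Equal visits: the action with the higher Q-value gets more probability.
by_value = q_weighted_visit_policy([2.0, 1.0], [10, 10])
```

Under this assumed form, an action that was visited often but has a low estimated value is downweighted relative to a pure visit-count target, which is one way to use more of the information gathered under a limited search budget.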

All Work Published on Robotics

With a first-of-its-kind competition for roboticists everywhere, researchers at Stanford are hoping to push domestic robotics into a new age of autonomy and capability.

Mykel Kochenderfer

Stanford HAI Conference Explores Robotics in a Human-Centered World: Hype, Hope, and Future Directions

Scholars zeroed in on the need for data, generalization, and better human experience.

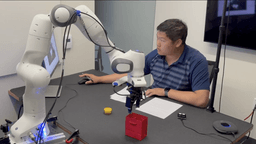

Stanford’s Vocal Sandbox brings us closer to robots that can adapt, learn, and assist in real time.

Stanford HAI co-director Fei-Fei Li says the next frontier in AI lies in advancing spatial intelligence. In this op-ed, she explains how enabling machines to perceive and interact with the world in 3D can unlock human-centered AI applications for robotics, healthcare, education, and beyond.
