Stanford Research Teams Receive New Hoffman-Yee Grant Funding for 2025

Five teams will use the funding to advance their work in biology, generative AI and creativity, policing, and more.

Stanford HAI has selected five interdisciplinary research teams from the 2024 cohort of Hoffman-Yee grant winners to receive additional financial support for their groundbreaking work in the field of AI.

With funding made possible by a gift from philanthropists Reid Hoffman and Michelle Yee, Hoffman-Yee Research Grants encourage bold ideas that align with HAI’s three research priorities: understanding the human and societal impact of AI, augmenting human capabilities, and developing AI technologies inspired by human intelligence.

Each winning team from 2024 received $500,000 in year one, and five of those teams have just been awarded up to $2 million each to continue their research over the next two years. To date, the Hoffman-Yee Research Grant program has distributed $27.6 million, including this most recent round of funding.

“Hoffman-Yee Research Grants are given to teams that show boldness, ingenuity, and potential for transformative impact in human-centered AI,” said Stanford HAI Co-Director James Landay. “We believe these projects will play a significant role in defining future work in AI, from academia to industry, government, and civil society.”

The competition for additional funding took place over two days in October. On the first day, teams presented updates to their work during the public Hoffman-Yee Symposium, which drew 175 in-person attendees and more than 600 virtual participants. On the following day, teams held private interviews with the Hoffman-Yee Research Grants selection committee. Each recipient of follow-on funding provided detailed project updates.

Here are the awardees:

Integrating Intelligence: Building Shared Conceptual Grounding for Interacting with Generative AI

Modern society communicates visually, through images, video, animation, and 3D virtual environments. Yet, creating immersive visual content requires specialized design expertise. Generative AI promises to be a collaborative assistant that helps humans create content, but to realize this potential, humans and GenAI tools need a shared conceptual grounding. This team aims first to understand the concepts we use when creating visual content and the mechanisms we need to establish conceptual grounding with others. Next, the researchers seek to build tools grounded in human concepts by teaching the concepts to the AI, and teaching the AI how to collaborate using those concepts.

During October's Hoffman-Yee Symposium, the Integrating Intelligence team outlines the challenges of generative AI tools that its research aims to overcome.

In 2025, the scholars started several projects to capture recordings of human creation processes and characterize the concepts that emerge from them. They also analyzed how humans interact with one another in creative processes to understand how they communicate ideas and what they expect of others. For example, they looked at human-produced sketches and text-based instructions for creating a design in CAD, as well as the gestures and verbal instructions one person gives another to build a specific LEGO block tower. In parallel, the team reported early progress in teaching these creative concepts to various AI systems.

This initial work generated measurable impact as the researchers presented at top conferences, informed product development at companies such as video game maker Roblox and Reve, a company that builds creative tools, and released their work to the open-source community. Notably, these efforts also paved the way for a new interdisciplinary community at Stanford focused on human-centered design with AI.

A Human-Centered AI Virtual Cell

In 2024, an interdisciplinary team representing biological and AI domains came together around a vision of combining unprecedented amounts of biological data with AI to create virtual cells. So far, the team has developed multimodal AI models spanning different types of biological data, including sequence data (DNA, RNA, proteins), structural data, and image data. To further this work, the scholars have integrated these data types across biological scales, from molecules to cells to tissues; demonstrated spatially and structurally aware modeling; and developed several AI assistants for biologists – all in their first year of research.

One of the team’s open-source initiatives, called Biomni, is a biomedical AI agent that can review literature, analyze data, and generate hypotheses across diverse fields such as gene prioritization, drug repurposing, rare disease diagnosis, microbiome analysis, and molecular cloning. Biomni users enter their queries through an intuitive web interface, and the agent takes over, creating a step-by-step plan and composing the necessary workflows dynamically, adjusting as it goes, without the need for predefined templates.

“This additional Hoffman-Yee funding enables us to pursue our bold vision of building virtual cell models to advance our understanding of drug response,” said Emma Lundberg, associate professor of bioengineering and of pathology at Stanford. “We are thrilled about the continued partnership and look forward to a future of multimodal, multiscale modeling and innovative lab-in-the-loop settings, with the ultimate aim of impacting human lives by supporting the development of better drugs.”

AI & Body-Worn Cameras: Improving Police-Community Relations

What if we could draw insights from body-worn camera footage to repair the relationship between law enforcement officers and the communities they are sworn to serve? This team of researchers has used AI-powered analytic tools to integrate learnings from body-worn camera footage into the training, evaluation, and reform efforts of law enforcement departments, assembling one of the largest research-focused policing repositories created to date.

Professor Jennifer Eberhardt details the latest findings of her team's AI and policing research at the symposium.

Such unprecedented scale unlocks new possibilities for training better AI models on real-world interactions; asking questions across interaction types, across officers, and over time periods that were previously impossible; examining relations between different types of encounters and social networks of officers; and gaining a bird’s-eye view of the entire face-to-face police-community relationship for a given department.

“Our initial findings demonstrate the potential of our approach: combining massive datasets, advanced AI tools, and interdisciplinary expertise to evaluate policy effectiveness and identify practices that reduce escalation and build trust,” said Stanford social psychologist and project lead Jennifer Eberhardt.

A Multimodal Odyssey into the Human Mind

Last year, this winning team set out to build a large-scale, multimodal brain foundation model to accelerate fundamental and automated neuroscience discovery, transform artificial intelligence and robotics, and enable precision diagnostics and personalized treatments. The scholars say what started as a core technical effort quickly evolved into a vibrant intellectual community, bringing together researchers from diverse fields across Stanford and beyond.

In their first year, the researchers published several papers outlining core building blocks for the Brain World Model, including model encoders and generative backbones, data anchors, and evaluation metrics and protocols.

“The HAI Hoffman-Yee funding has been catalytic for our work, enabling us to pursue a genuinely high-risk, high-reward vision of building foundation models of the human brain by bringing together researchers from neuroscience, AI, imaging, genetics, and clinical science,” said Ehsan Adeli, assistant professor of psychiatry and behavioral sciences at Stanford. “This support has created a level of cross-disciplinary collaboration that would not have been possible otherwise and allows us to explore bold, unconventional ideas that fall outside traditional funding models. It is helping us build a new community around one of the most ambitious goals in science: understanding the human mind in all its complexity.”

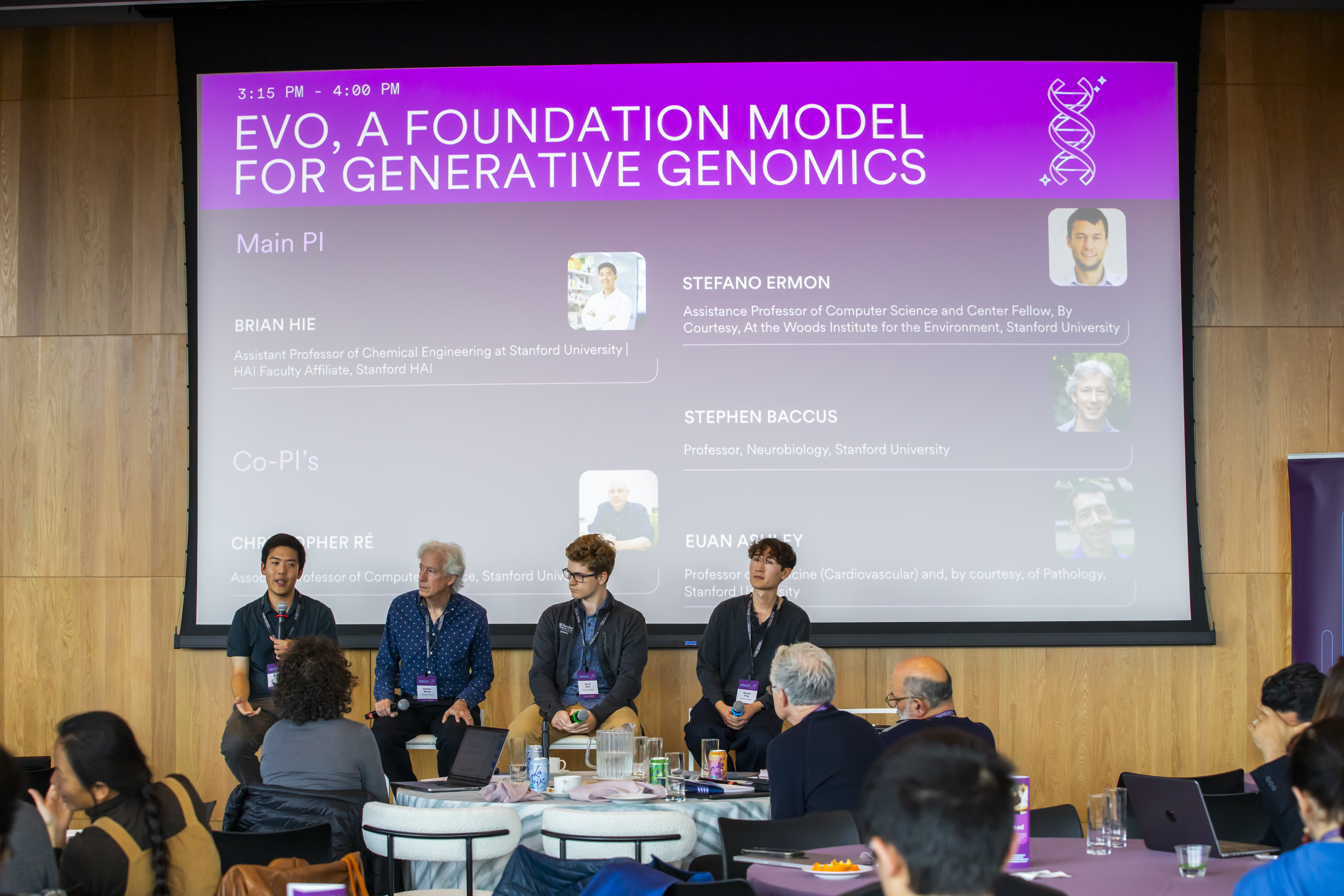

Evo: A Foundation Model for Generative Genomics

The team behind Evo has achieved remarkable productivity in advancing AI for biology and human health, delivering frontier biological foundation models with a strong commitment to open science. In 2024, The Atlantic named Evo 1 one of “The Most Important Breakthroughs of 2024.” Now, Evo 2 is gaining traction just eight months after its release.

Evo 2 is trained on 9.3 trillion DNA base pairs from a highly curated genomic atlas spanning all domains of life. It accurately predicts functional impacts of genetic variations, without task-specific fine-tuning. Moreover, it is fully open-source, meaning the researchers have shared their model parameters, training code, and dataset to spur collaborative innovation.

Assistant Professor Brian Hie and the Evo team share research highlights of their work.

“Our project is developing foundation models of genomes that could help decipher human biology and design treatments for human health. The project has been recognized by scientists around the world and was kickstarted in large part by the Hoffman-Yee grant. Our work would not be possible without this support,” said Brian Hie, assistant professor of chemical engineering at Stanford.

Interested in applying for a Hoffman-Yee Research Grant? We’re currently calling for proposals for 2026. Letters of intent are due by Jan. 28, 2026.

Learn more about the Hoffman-Yee Research Grant program or watch the research conversations from this year's Hoffman-Yee Symposium.
