Stanford RegLab, Princeton, and the County of Santa Clara Collaborate to Use AI to Identify and Map Racial Covenants from over 5 Million Deed Records

The approach paves the way for faster and more accurate compliance with California’s anti-discrimination law.

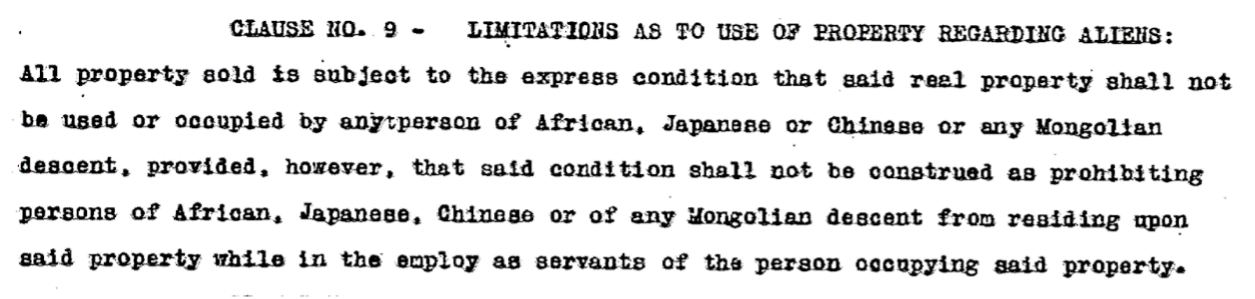

When Dan Ho purchased a home in Palo Alto, he recounts, “We had to sign papers that said that the ‘property shall not be used or occupied by any person of African, Japanese or Chinese or any Mongolian descent,’ except for the capacity of a servant to a White person.”

“It was a stunning testament to housing discrimination in the area and it’s been constitutionally unenforceable since 1948,” said Ho, the William Benjamin Scott and Luna M. Scott Professor of Law, Director of RegLab, and Senior Fellow at the Stanford Institute for Human-Centered Artificial Intelligence (HAI).

Example of a racially restrictive covenant.

Despite the Supreme Court holding such clauses unenforceable, racially restrictive covenants still litter deed records across the country.

In 2021, California enacted a law that required the state’s 58 counties to create programs to identify and redact deed records that include racial covenants.

But the new law also posed a daunting task: Santa Clara County alone has 24 million deed documents, totaling 84 million pages, dating back to 1850. “Prior to this collaboration, our team manually read close to 100,000 pages over weeks to identify racial covenants,” Assistant County Clerk-Recorder Louis Chiaramonte said, “and it was a challenging undertaking.”

To comply with the law, some California counties contracted with commercial vendors. Los Angeles County, for instance, hired a company for $8 million to conduct this scan over seven years. In other jurisdictions, teams of citizens have valiantly crowdsourced these efforts, with thousands of volunteers poring over deed records. But not all jurisdictions have such resources.

The County of Santa Clara, home to Silicon Valley, approached it differently. Stanford University’s Regulation, Evaluation, and Governance Lab (RegLab) partnered with the county to use the power of AI – and large language models, specifically – to assist in this monumental task.

“The County of Santa Clara has been proactively going through millions of documents to remove discriminatory language from property records,” said Chief Operating Officer Greta Hansen. “We’re grateful for our partnership with Stanford, which has helped the county substantially expedite this process, saving taxpayer dollars and staff time.”

The team, led by Stanford’s RegLab and including HAI affiliates and Princeton Professor Peter Henderson, curated a collection of racial covenants from various jurisdictions across the country and trained a state-of-the-art open language model to detect racial covenants with almost perfect accuracy. “We estimate that this system will save 86,500 person-hours and cost less than 2% of what comparable proprietary models would have,” said co-lead author Faiz Surani, a fellow with the RegLab. The team focused on 5.2 million deed records from 1902 to 1980, the period most at issue.

The team also figured out a way to cross-reference historical maps to locate most of these properties. They matched textual descriptions of maps (e.g., “Map [that] was recorded . . . June 6, 1896, in Book ‘I’ of Maps at page 25.”) to administrative records in the Santa Clara County Surveyor’s Office to geolocate tracts. “Of all the items coming out of the state law, we thought that mapping could have been nearly impossible,” Chiaramonte said.
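To make the matching step concrete, here is a minimal sketch of how a map reference like the one quoted above might be pulled out of deed text and looked up in a table of surveyor records. It is an illustration only, not the team's method: the pattern `MAP_REF`, the functions `extract_map_reference` and `locate_tract`, and the one-entry `SURVEYOR_INDEX` with its placeholder tract ID are all hypothetical, and real deed text from scanned documents would likely need far more tolerant matching.

```python
import re
from typing import Dict, Optional, Tuple

# Hypothetical pattern for phrases like: Book 'I' of Maps at page 25
# The book label may be wrapped in straight or curly quotes.
MAP_REF = re.compile(
    r"Book\s+[\"'‘’“”]?(?P<book>[A-Za-z0-9-]+)[\"'‘’“”]?"
    r"\s+of\s+Maps,?\s+at\s+pages?\s+(?P<page>\d+)",
    re.IGNORECASE,
)


def extract_map_reference(deed_text: str) -> Optional[Tuple[str, int]]:
    """Return (book, page) for the first map reference found, else None."""
    match = MAP_REF.search(deed_text)
    if match is None:
        return None
    return match.group("book").upper(), int(match.group("page"))


# Hypothetical stand-in for administrative records keyed by (book, page).
# The tract ID is a placeholder, not real data.
SURVEYOR_INDEX: Dict[Tuple[str, int], str] = {
    ("I", 25): "example-tract-id",
}


def locate_tract(deed_text: str, index: Dict[Tuple[str, int], str]) -> Optional[str]:
    """Look up the tract for a deed's map reference, if one can be found."""
    ref = extract_map_reference(deed_text)
    if ref is None:
        return None
    return index.get(ref)


if __name__ == "__main__":
    sample = "Map was recorded . . . June 6, 1896, in Book ‘I’ of Maps at page 25."
    print(extract_map_reference(sample))
    print(locate_tract(sample, SURVEYOR_INDEX))
```

Keying the lookup on the (book, page) pair mirrors how the deed text itself cites the map, so a deed with no recognizable reference simply returns no location rather than a guess.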

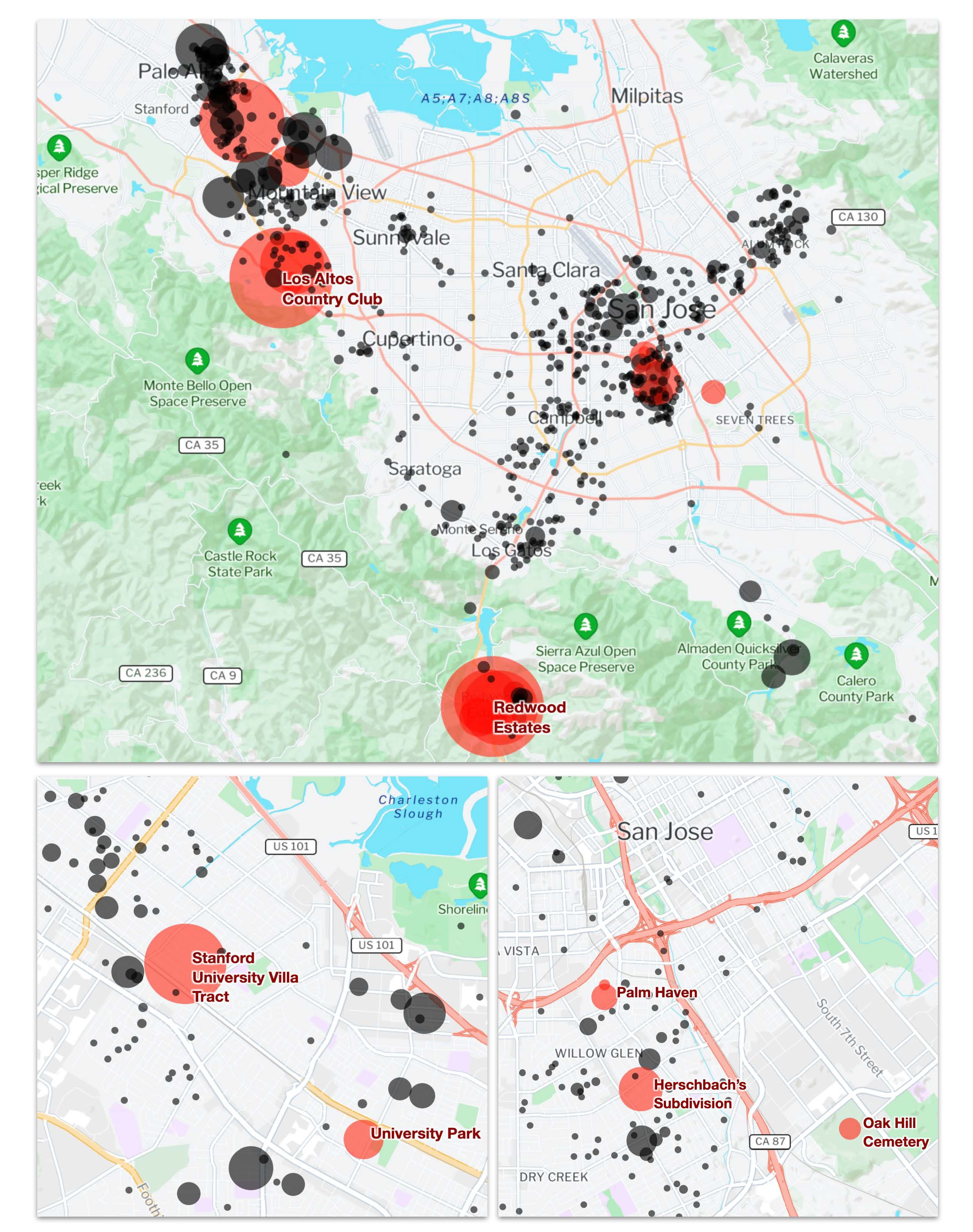

These maps revealed extraordinary insights into the evolution and diffusion of racial covenants in Santa Clara County:

The team estimated that one in four properties in Santa Clara County were subject to racial covenants as of 1950.

Only 10 developers were responsible for a third of the identified covenants, suggesting that developers had a lot of say in how Santa Clara County was constructed.

Racial covenants excluded African Americans at the same rate as Asian Americans, even though the African American population was less than a tenth the size of the Asian American population.

The team found a striking instance of a San Jose-owned cemetery that included burial deeds only for “Caucasians.” This goes against the conventional historical account that racial covenants were used only between private parties after the Supreme Court banned state-based racial zoning.

These maps show where racially restrictive covenants have been found.

“We believe this is a compelling illustration of an academic-government collaboration to make this kind of legislative mandate much easier to achieve and to shine a light on historical patterns of housing discrimination,” said co-lead Mirac Suzgun, a JD/PhD student in computer science. Chiaramonte agreed: “This collaboration paved a new path for how we can use technology to achieve the momentous mandate to identify, map, and redact racial covenants. It reduced the amount of time our team needed to review historical documents dramatically.”

The team has released the paper and is making the model available to enable all jurisdictions faced with this task to identify, redact, and develop historical registers of racial covenants more effectively.

Faiz Surani is a research fellow with the Stanford RegLab.

Mirac Suzgun is a J.D./Ph.D. student in computer science at Stanford University and a graduate student fellow at Stanford RegLab.

Vyoma Raman was a summer fellow at the Stanford RegLab and is an M.S. student in computer science.

Christopher D. Manning is the Thomas M. Siebel Professor of Machine Learning, Professor of Linguistics and Computer Science, and Senior Fellow at HAI.

Peter Henderson is Assistant Professor at Princeton University in the Department of Computer Science and the School of Public and International Affairs and is an affiliate of the RegLab.

Daniel E. Ho is the William Benjamin Scott and Luna M. Scott Professor of Law, Professor of Political Science, Professor of Computer Science (by courtesy), Senior Fellow at Stanford’s Institute for Human-Centered AI, Senior Fellow at SIEPR, and Director of the RegLab at Stanford University.