Transparency in AI is on the Decline

A new study shows the AI industry is withholding key information.

AI companies, and the AI industry as a whole, have never been more important. Seven established AI companies including Google and Meta account for more than 35% of the entire S&P 500. Startups like OpenAI and Anthropic are the most valuable private companies in history. Billions of people are now using foundation models to search for information, assist in writing, and generate images, video, and audio. But how transparent are the companies building our collective future?

Today, our team of researchers from Stanford, Berkeley, Princeton, and MIT published the 2025 Foundation Model Transparency Index, the third edition of the annual index. We find that transparency has declined since 2024, with companies diverging greatly in how transparent they are.

The 2025 FMTI scored 13 companies, finding large discrepancies in the extent to which companies prioritize transparency.

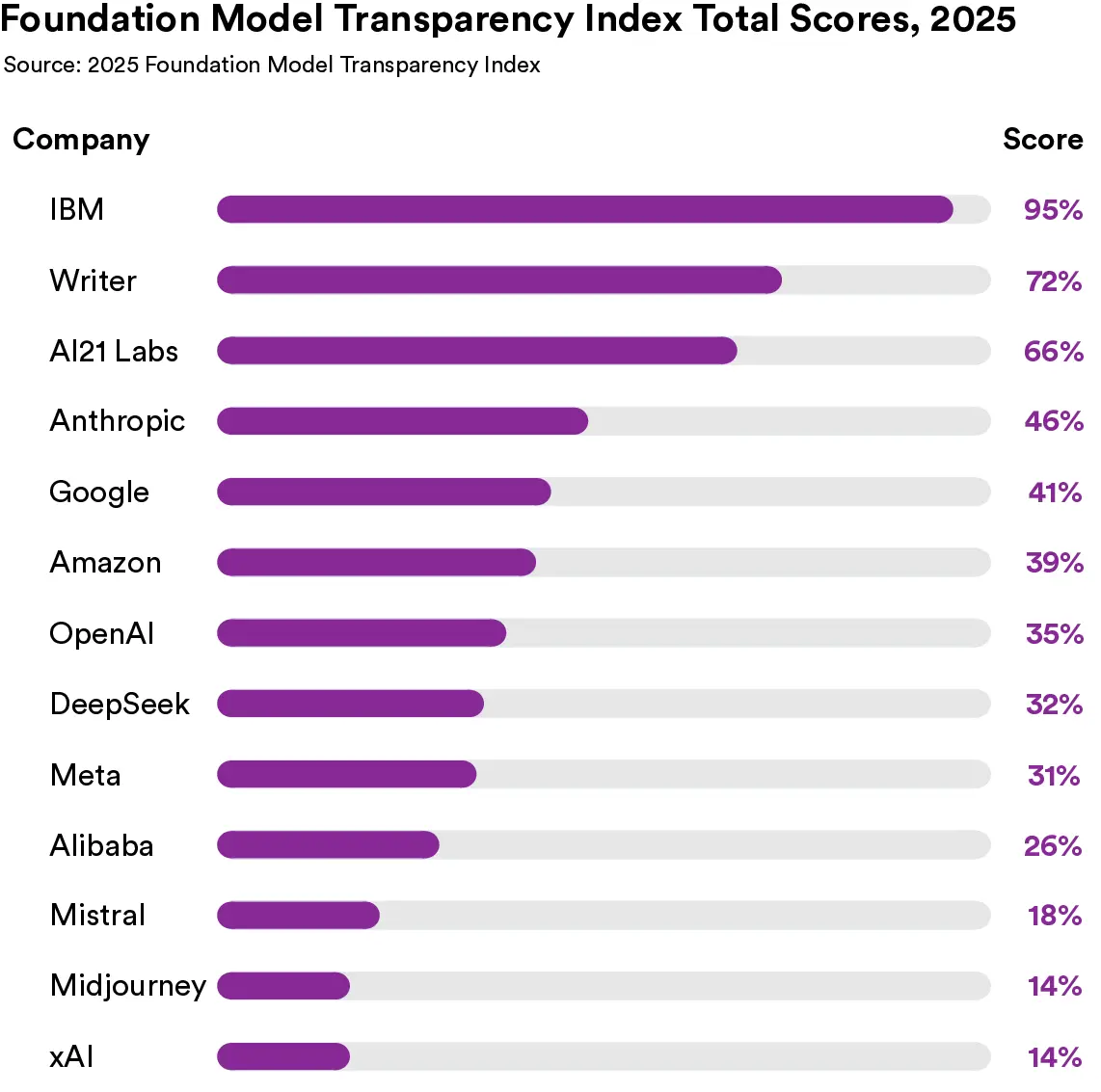

The Foundation Model Transparency Index is an annual report that comprehensively assesses the transparency of major AI companies in relation to their flagship models. Each company is scored on a 100-point scale that spans topics like training data, risk mitigation, and economic impact. This year’s Index establishes that industry-wide transparency is low: companies scored just 40 points on average.

While the average is low, companies vary greatly in their practices. Three clusters emerge: top performers scored around 75, the middle of the pack scored around 35, and low scorers averaged around 15.

At the top, IBM earned the highest score in the Index’s history at 95/100. IBM also sets an industry precedent by disclosing information on multiple indicators where no other company shares its practices. For example, IBM is the sole company to provide sufficient detail for external researchers to replicate its training data and to grant access to this data to external entities such as auditors.

On the other hand, xAI and Midjourney’s scores of 14/100 are among the lowest in the Index’s history. They share no information about the data used to build their models, the risks associated with their models, or steps they take to mitigate those risks. Overall, the thirteen companies we assess share little to no information about the environmental or societal impact of building and deploying their foundation models.

These patterns demonstrate that current transparency practices are primarily determined by the extent to which individual companies choose to prioritize transparency, rather than industry-wide incentives (or disincentives) for transparency.

The Foundation Model Transparency Index, which has tracked transparency since 2023, saw an overall dip in 2025 and a major reshuffle of top players.

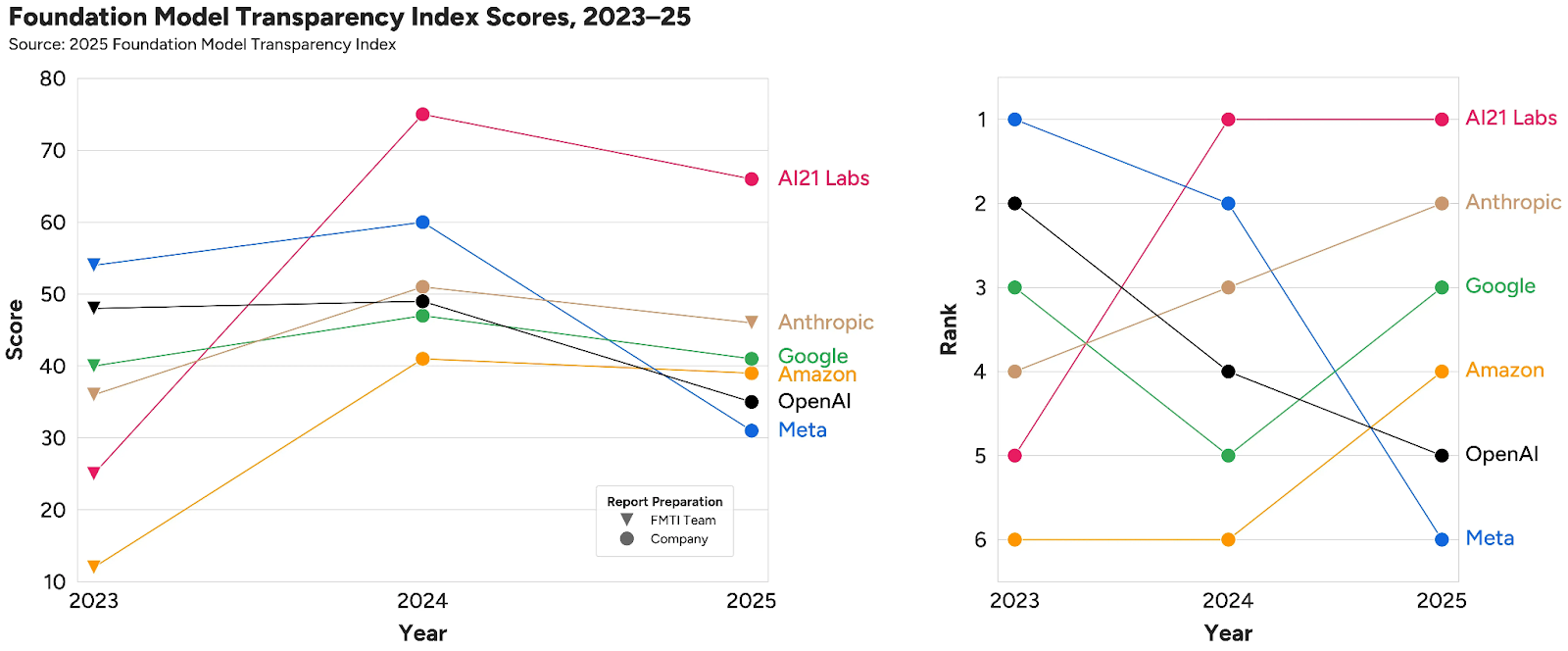

This work reveals the changing state of corporate transparency. Because the AI ecosystem itself has changed significantly over the past three years, the 2025 Index is the first edition to update the original indicators introduced in the 2023 edition. With this change in criteria in mind, scores have fallen from an average of 58/100 in 2024 to 40/100 in 2025. Individual companies have decreased their transparency significantly: Meta’s score dropped from 60 to 31 and Mistral’s score from 55 to 18. The 2025 edition also adds four companies (Alibaba, DeepSeek, Midjourney, xAI), bringing major Chinese companies into the Index for the first time. These four companies all score in the bottom half of the Index.

Alongside the general decline in transparency, the ranking of companies has been shaken up. Of the six companies we scored every year, Meta and OpenAI started in first and second in 2023, but are now last and second-to-last, respectively. In contrast, AI21 Labs has risen from second-to-last in 2023 to first in 2025. These score changes reflect major changes in transparency practices. Meta did not release a technical report for its Llama 4 flagship model. Google was significantly delayed in releasing a model card and technical report for Gemini 2.5, prompting scrutiny from British lawmakers over the company's public commitments to release these documents.

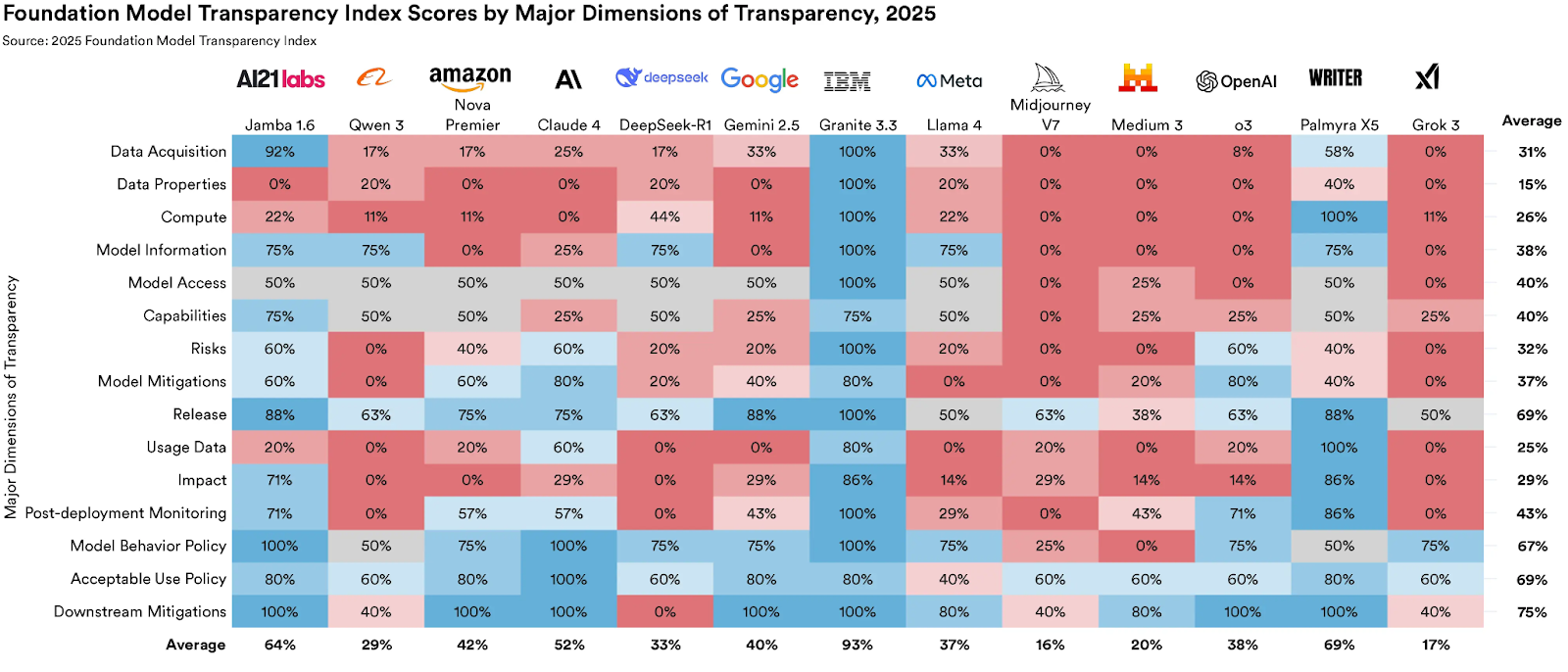

The Foundation Model Transparency Index scores companies on 15 major areas, including data acquisition, model access, and post-deployment monitoring.

The Foundation Model Transparency Index clarifies the complex terrain of the current information ecosystem. The entire industry is systemically opaque about four critical topics: training data, training compute, how models are used, and the resulting impact on society. Each of these areas links foundation model developers to the broader AI supply chain. Because many of these areas have remained opaque across the last three years, this year’s Index highlights them as ripe for policy intervention.

Little Information About Environmental Impact

Companies are highly opaque about the environmental impact of building foundation models. Ten companies disclose none of the key information related to environmental impact: AI21 Labs, Alibaba, Amazon, Anthropic, DeepSeek, Google, Midjourney, Mistral, OpenAI, and xAI. They share no information about energy usage, carbon emissions, or water use. This lack of disclosure is particularly significant because massive investments in datacenters have strained the energy grid, contributing to increased energy prices in the U.S. and elsewhere.

Openness doesn’t guarantee transparency; major open developers like DeepSeek and Meta are quite opaque.

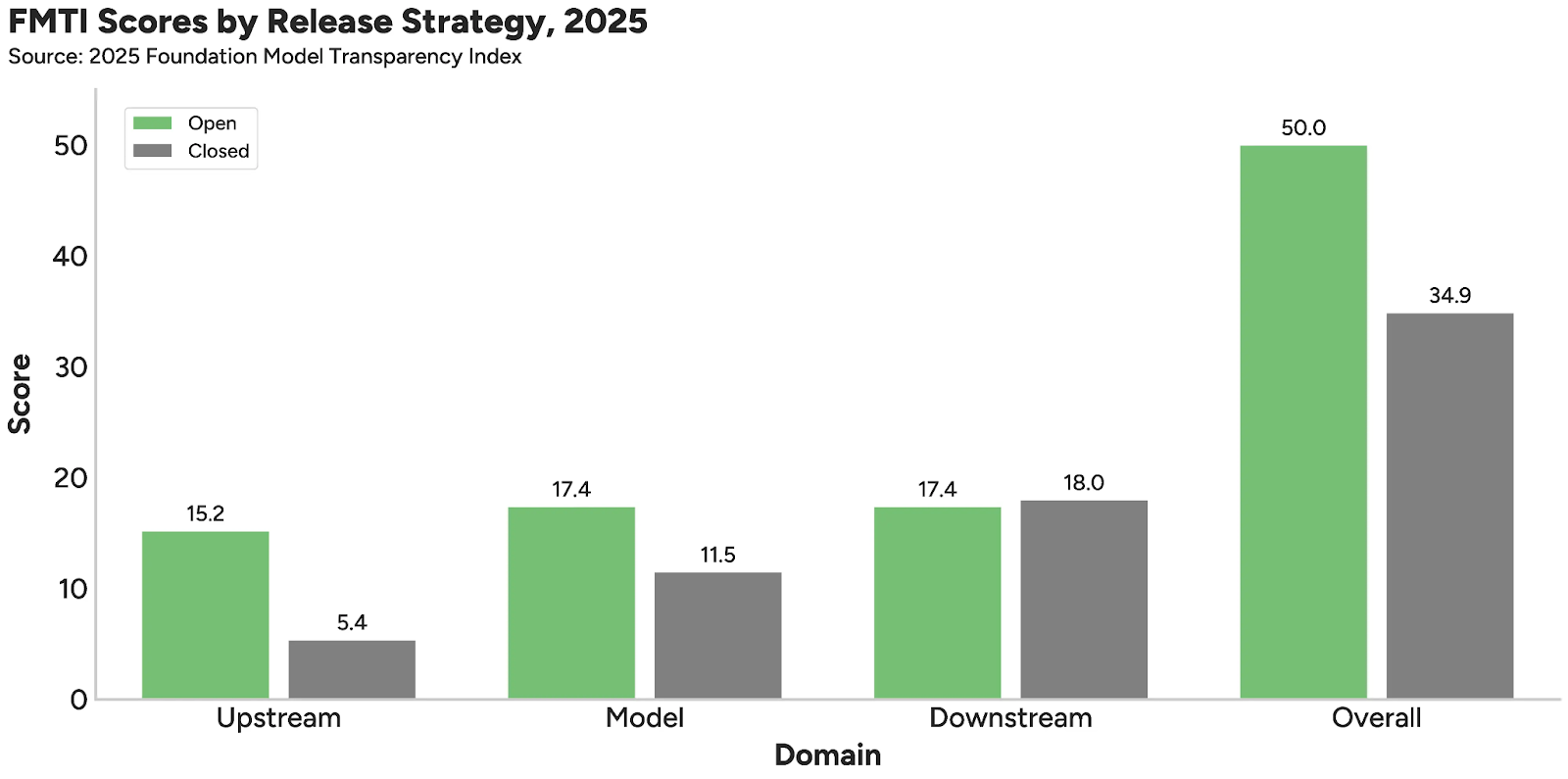

Openness vs. Transparency

Openness and transparency are intertwined concepts that are often used synonymously. To distinguish the terms, we say a model is open if its weights are publicly available while a company is transparent if it discloses significant information about its practices to the public. The open release of model weights does not guarantee transparency on many topics such as training compute, risk assessment, and downstream use. Empirically, open developers tend to be more transparent than their closed counterparts.

But this aggregate effect is prone to misunderstanding. While two open model developers, IBM and AI21 Labs, are very transparent, three of the most influential open developers, DeepSeek, Meta, and Alibaba, are quite opaque. A developer’s choice to release open model weights should not be assumed to confer broader transparency about company practices or societal impact.

Looking Forward

Sharing information siloed within AI companies is an essential public good: it supports corporate governance, helps mitigate harms from AI, and promotes robust oversight of this cutting-edge technology. The overarching need for greater transparency is a top priority for AI policy across many jurisdictions. California and the European Union have passed laws that mandate transparency around the risks of frontier AI. And Dean Ball, former AI adviser at the White House and primary author of America’s AI Action Plan, has proposed transparency measures as a common-sense component of AI regulation. The Foundation Model Transparency Index can serve as a beacon for policymakers by identifying both the current information state of the AI industry and the areas that are more resistant to improvement over time absent policy.

Learn more about the 2025 Foundation Model Transparency Index, explore the transparency reports for each company, or read the report that describes the methods, analyses, and findings.
